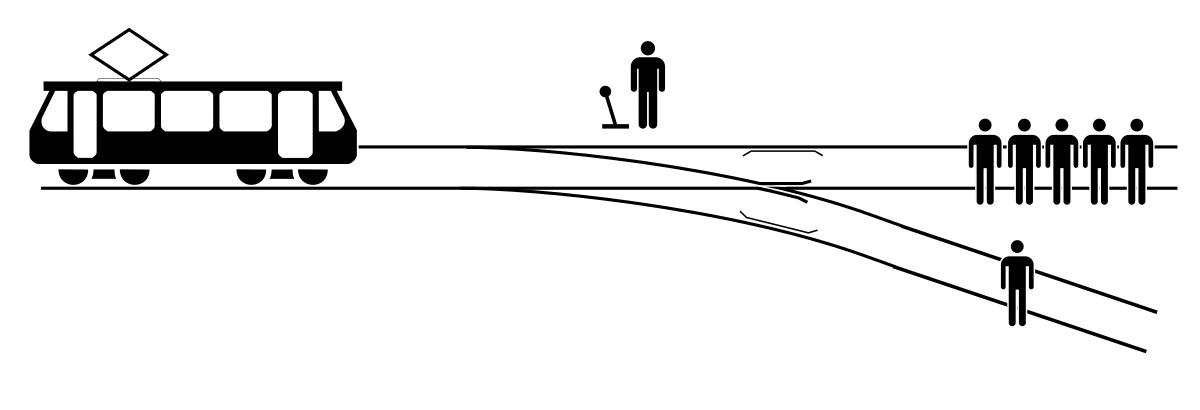

Has anyone (or any big YouTuber) run a trolley problem test with FSD Beta?

Haven't found anything, and I'm sure it would make a nice viral video if someone did it (it would probably cost a lot and require a stunt driver etc. to make it realistic).

Really curious how impossible scenarios are programmed into the FSD neural nets, or whether there is some sort of "governing ethics" code actually running (or whether nothing is running and FSD Beta just gives up).

Some scenarios with two unavoidable crashes, off the top of my head:

-one person vs multiple people

-car vs human

-human vs animal

-car vs bus

etc
