Welcome to Tesla Motors Club

Discuss Tesla's Model S, Model 3, Model X, Model Y, Cybertruck, Roadster and More.


Tesla, TSLA & the Investment World: the Perpetual Investors' Roundtable

- Thread starter AudubonB

- Start date

Fact Checking

Well-Known Member

new record Netherlands.

Monday deliveries.

Model 3 = 648

Model S = 15

Model X = 10

Total = 673

And the last Fremont ship heading for Europe hasn't even arrived yet: the "RCC Europe" with 3k-4k Model 3's is expected to reach Amsterdam port in 3 days:

(The ship is in the lower left corner of the map.)

oh boy

RobStark

Well-Known Member

ReflexFunds

Active Member

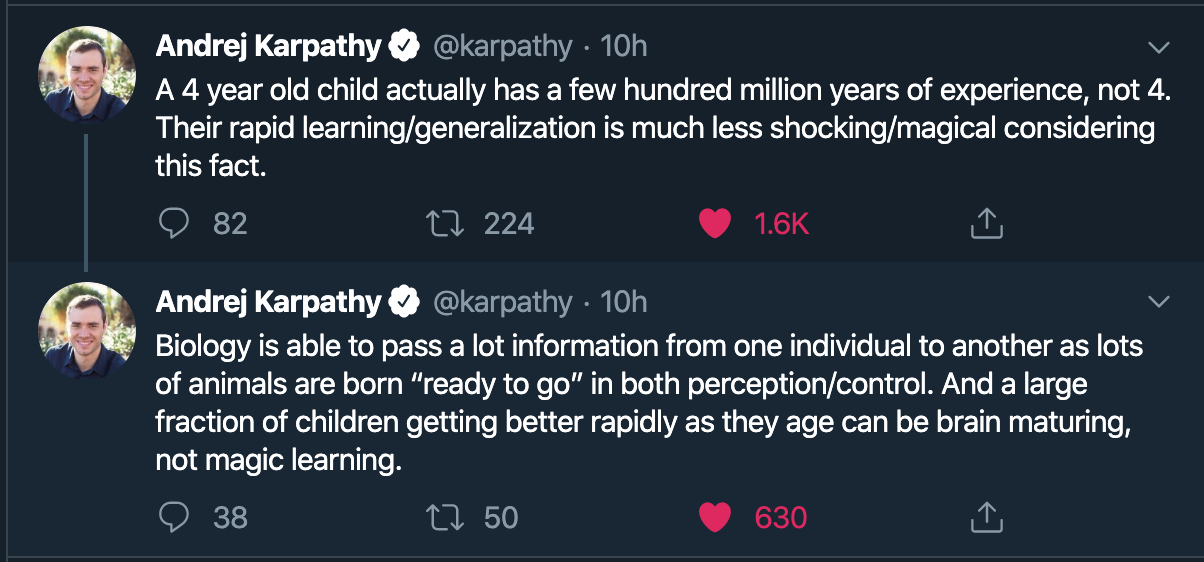

Karpathy is actually making an extremely important point here. Andrej and Elon’s shared views on this topic are a key driver of why Tesla’s Robotaxi AI strategy is so different from the competition.

Andrej and Elon acknowledge just how difficult it is to solve driving with AI and how much of a head-start human drivers have. In contrast Waymo and everyone else going with their Data Light, Hardware Heavy strategy are instead trivialising just how much learning it takes to become competent at driving and how much data it will take to catch up with humans.

Views on exactly how human and animal learning work still vary greatly, but I’d say the most common in the AI community is Yann LeCun’s view: “If intelligence is a cake, the bulk of the cake is unsupervised learning, the icing on the cake is supervised learning, and the cherry on the cake is reinforcement learning.”

Where broadly:

- Unsupervised Learning - Learning patterns and correlations between actions and consequences as you begin to interact with the world.

- Supervised Learning - Children asking questions and getting answers about the world.

- Reinforcement learning - Rewards for good behaviour or correct answers.

However this is only part of the story and potentially only a relatively small part.

A large amount of the behaviour of animals is not actually learned during the animal’s life but is driven by behaviour algorithms present at birth. For example, animals are born with innate abilities such as spiders' ability to hunt from birth, or mice's inherited burrowing techniques. Many animals are also born with extremely effective unsupervised learning algorithms that allow them to learn specific tasks very quickly with only a few unsupervised examples.

There is obvious evolutionary pressure for animals to be born with abilities that aid survival or an ability to learn to recognise food and predators quickly.

These innate behaviours and learning abilities are learned via hundreds of millions of years of training via natural selection. Natural selection is effectively a reinforcement learning algorithm which is rewarded when an animal produces offspring. However, learning via natural selection is very inefficient and is limited in two ways: 1) very little useful information is transmitted from an animal's life, since we only know whether or not the animal survived long enough to produce offspring; 2) very little information can be stored in the genome.

Reinforcement learning via natural selection takes place via genes that encode particular circuits and connections of neurons corresponding to particular innate behaviours, plus architectures for extremely effective learning algorithms that allow continued learning through the animal's life. However, the human genome only contains about 1 GB of data, so there is not enough storage capacity for the exact weights and wiring of a brain’s neurons to be specified in the genome. Instead the genome has to specify a set of rules for how to wire the brain as it develops. This is possibly one of the key reasons humans have developed relatively general intelligence. Given the limited amount of information that can be stored in the genome, there is pressure to develop very general patterns and algorithms which can be used for multiple applications. https://www.nature.com/articles/s41467-019-11786-6.pdf This is called the “genomic bottleneck” and potentially the very small size of the human genome has been a key driver of human intelligence - relative to, for example, the lungfish with a 40x larger genome and much less pressure to develop generalised algorithms.
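To put rough numbers on the storage argument, here is a back-of-envelope sketch. The base-pair count, neuron count and synapses-per-neuron figures are order-of-magnitude assumptions chosen for illustration, not measurements, and the function names are made up for this example.

```python
import math

# Rough, commonly quoted figures; all of them are assumptions for illustration.
BASE_PAIRS = 3.1e9          # approximate length of the human genome
BITS_PER_BASE = 2           # four possible bases -> 2 bits each
NEURONS = 1e11              # order-of-magnitude neuron count
SYNAPSES_PER_NEURON = 1e3   # conservative order-of-magnitude estimate


def genome_bytes(base_pairs=BASE_PAIRS, bits_per_base=BITS_PER_BASE):
    """Upper bound on raw genome information, in bytes."""
    return base_pairs * bits_per_base / 8


def wiring_bytes(neurons=NEURONS, synapses_per_neuron=SYNAPSES_PER_NEURON):
    """Bytes needed to list every connection target explicitly (no weights)."""
    bits_per_target = math.log2(neurons)   # address of one target neuron
    return neurons * synapses_per_neuron * bits_per_target / 8


if __name__ == "__main__":
    g = genome_bytes()
    w = wiring_bytes()
    print(f"genome: {g / 1e9:.2f} GB")
    print(f"explicit wiring diagram: {w / 1e12:.0f} TB")
    print(f"shortfall: roughly {w / g:,.0f}x")
```

Even with these conservative guesses, an explicit wiring list is several orders of magnitude larger than the genome, which is the gap the "rules for wiring" argument relies on.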

To some extent this learning via natural selection can be thought of as analogous to a more powerful form of the pre-training or transfer learning used in many machine learning applications today.

It is important to note though that natural selection develops strong neural network architectures and wiring rules rather than optimising neural network weights.

There is some very interesting recent work on “weight agnostic” artificial neural networks, showing that if you carefully select the network architecture for a particular task, the neural network can actually perform some reinforcement learning tasks with fully randomised weights. https://arxiv.org/pdf/1906.04358.pdf This shows just how powerful the network architecture can be itself even before you start training on data.
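As a toy illustration of the idea (not the paper's networks or tasks), the sketch below scores two hand-built architectures while every connection shares one weight value, swept over a small set of values. The task (which of two inputs has the larger magnitude), both architectures and the list of shared weights are assumptions made up for this example.

```python
import random

# Shared weight values used to probe an architecture; every connection
# in the network gets the same value on a given trial (assumed set).
SHARED_WEIGHTS = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]


def naive_net(x1, x2, w):
    """One weighted layer after the abs nodes: output sign flips when w < 0."""
    a = abs(w * x1)
    b = -abs(w * x2)          # negating ("inverse") activation on the second branch
    return w * a + w * b


def agnostic_net(x1, x2, w):
    """Same nodes plus one extra linear layer, so w enters the path after
    the abs nodes an even number of times and its sign cancels out."""
    hidden = naive_net(x1, x2, w)
    return w * hidden


def accuracy(net, w, samples):
    """Fraction of samples where the net picks the input with larger magnitude."""
    correct = 0
    for x1, x2 in samples:
        predicted_first = net(x1, x2, w) > 0
        correct += predicted_first == (abs(x1) > abs(x2))
    return correct / len(samples)


def mean_accuracy(net, samples):
    """Average accuracy over all shared weight values."""
    return sum(accuracy(net, w, samples) for w in SHARED_WEIGHTS) / len(SHARED_WEIGHTS)


if __name__ == "__main__":
    rng = random.Random(0)
    data = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(1000)]
    print("naive:", mean_accuracy(naive_net, data))
    print("agnostic:", mean_accuracy(agnostic_net, data))
```

The second wiring solves the task for every shared weight without any training, while the first only works for positive weights. The structure is doing the work, which is the point being made about architecture.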

So back to Robotaxis - when we are trying to teach a car how to drive, in reality we are competing with a human who already has 100s of millions of years of data and reinforcement learning via natural selection and 20+ years of Unsupervised Learning, Supervised Learning and Reinforcement Learning via interaction with the world and teachers.

This is why a Robotaxi will need 10s of billions of miles of real world driving experience vs a human who can learn to drive with 1,000 miles of real world driving lessons. And this is why Waymo and every other potential Robotaxi competitor is wrong when they assume they can learn enough just from a few hundred extremely expensive test vehicles and 10 million+ miles of real world driving experience.

So Tesla's Robotaxi strategy is built from the assumptions:

1. We cannot solve Robotaxis without 10 billion+ miles of real world experience.

2. We cannot get 10 billion+ miles of real world experience without a hardware suite affordable to install in a normal consumer owned car.

3. We cannot get Lidar this cheap in a reasonable timeframe and without the economies of scale of first having a functioning Robotaxi business.

4. Therefore we cannot use Lidar.

5. Hence we have to solve distance and velocity estimates using machine learning with Cameras and Radar data. If we can do this, Lidar has no extra value anyway as its capabilities will only be a subset of what we can already do with Machine Vision.
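The scale argument behind assumptions 1 and 2 can be checked with simple arithmetic. In this sketch the fleet sizes and annual mileage per vehicle are hypothetical round numbers, not figures from Tesla or Waymo; only the 10 billion mile target comes from the list above.

```python
def years_to_reach(target_miles, vehicles, miles_per_vehicle_per_year):
    """Years for a fleet to accumulate target_miles at a constant rate."""
    return target_miles / (vehicles * miles_per_vehicle_per_year)


TARGET_MILES = 10e9  # the "10 billion+ miles" threshold from the list above

# Hypothetical fleets: sizes and annual mileage are illustrative guesses.
fleets = {
    "test fleet (500 vehicles, 50,000 mi/yr each)": (500, 50_000),
    "consumer fleet (500,000 cars, 10,000 mi/yr each)": (500_000, 10_000),
}

if __name__ == "__main__":
    for name, (count, miles) in fleets.items():
        years = years_to_reach(TARGET_MILES, count, miles)
        print(f"{name}: {years:,.0f} years")
```

Under these guesses the small test fleet needs centuries to reach the target while the consumer fleet needs a couple of years, which is why the argument leans so heavily on hardware cheap enough for ordinary cars.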


I fart in the general tslaq direction.

That will be difficult if hot air rises

OMG, Business Insider is run by freaking morons.

A SpaceX rocket lost its nosecone during an otherwise successful launch in Florida

...


Double facepalm is not enough. I need two faces.

It's not benefiting Tesla if not IPO or SO - if you're just buying from the market. So, why not hedge, I don't see a problem.

This is only half true at best. A high stock price means that Tesla can borrow more on better terms.


When I saw this I had similar thoughts. Genes encode the shape of the neural net, the learning from animal's life experience is on top, some things like play behaviours are genetic to enhance the learning. For FSD humans have encoded the shape of the neural net, which is then trained with billions of miles of driving data.

For better FSD they could use genetic algorithms to improve the shape (number of layers, size of layers, interconnections, etc.) of the artificial neural net, with the aim of reducing training and improving driving behaviour. They can also add things like smart summon to improve the training data acquisition.

There is little doubt in my mind that Spiegel is right here on TMC posing as one or more unhappy Tesla owner(s), besmirching their name and trying to dissuade people from purchase. It wouldn't surprise me if he had 10-20 different aliases. A bunch of his cohorts are here too, all under false pretenses. IMO, it's fraud.

It boggles my mind why TMC management doesn't take care of this problem.

The problem is that you then become a TSLAQ and block every person that posts negatively, which isn't productive. There's no really good solution other than for Tesla to just keep to the plan.


They'd need a rapidly-tested cost metric; genetic algorithms require lots of cycles (and remember, to evaluate the cost metric you have to first train each neural net in the population). There are also some options apart from differential evolution that involve slope following, which converge faster but are more likely to get stuck in local minima. And hybrids thereof, to try to "jump out" of local minima. The optimal choice depends on the problem you're solving.
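For anyone who hasn't seen one, a minimal genetic search over network shape looks roughly like the sketch below. Everything in it is hypothetical: the two-number "genome" (layer count, layer width), the mutation steps, and especially `proxy_cost`, which stands in for the expensive train-then-evaluate step and has nothing to do with real driving performance.

```python
import random


def proxy_cost(genome):
    """Stand-in cost metric (lower is better). A real search would have to
    train the network described by `genome` and measure it, which is the
    expensive step mentioned above."""
    layers, width = genome
    return abs(layers - 6) + abs(width - 128) / 32


def mutate(genome, rng):
    """Randomly nudge the layer count and width, within sane lower bounds."""
    layers, width = genome
    layers = max(1, layers + rng.choice([-1, 0, 1]))
    width = max(8, width + rng.choice([-16, 0, 16]))
    return (layers, width)


def crossover(a, b, rng):
    """Take each trait from one of the two parents at random."""
    return (rng.choice([a[0], b[0]]), rng.choice([a[1], b[1]]))


def evolve(generations=30, population_size=20, seed=0):
    """Return the best genome found and the best cost seen per generation."""
    rng = random.Random(seed)
    population = [(rng.randint(1, 12), rng.choice(range(16, 513, 16)))
                  for _ in range(population_size)]
    history = []
    for _ in range(generations):
        population.sort(key=proxy_cost)
        history.append(proxy_cost(population[0]))
        parents = population[: population_size // 2]   # keep the better half
        children = [mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
                    for _ in range(population_size - len(parents))]
        population = parents + children
    population.sort(key=proxy_cost)
    history.append(proxy_cost(population[0]))
    return population[0], history


if __name__ == "__main__":
    best, history = evolve()
    print("best shape (layers, width):", best)
    print("best cost per generation:", history)
```

Note that `proxy_cost` is called for every individual in every generation. If each call meant training a full network, that cost would dominate, which is why a fast proxy metric matters so much.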


Tslynk67

Well-Known Member

Oh my oh my oh my. i wouldn't have thrown the kitchen sink at it, but definitely some appliances would have gone.

Any other good deal like that flying around?

The Jan 21 $650’s were also $1 and thanks to a heads-up on this forum - apologies, I don’t remember whom - I bought 10, which are currently 890% up.

Now my problem is what to do with them... I’m thinking to roll into Jan 2022’s which are 2.5x the price, but a lot more time value...

Any suggestions?

JRP3

Hyperactive Member

Exactly. Unfortunately, I had my margin maxed out by then thinking that the good deals were happening long before that.

IDK how the hell people knew to wait for $180.

I certainly didn't know but was able to keep buying almost all the way down, selling some other stocks and using some margin. Definitely took some short term risk.

Well, despite my paranoia which got me (a lot) more Disagrees than I can honestly say I'm comfortable with, I've been holding through these levels, making 45% return in 5 months and not planning on selling until Tesla surpasses Amazon and Apple in size. If you ask me which company is the most important to our future and which is the most innovative, the answer is pretty obvious. I'm now an unsanctioned Tesla spokesperson for our small 17k member FB group.

This is long overdue but I have to admit that, although the stock's volatility made me a speculator, its fundamentals, most of which are presented here in this forum, turned me into an investor. Thank you.

My friend, who is a now-departed Tesla middle manager, has nothing but good things to say about Elon. They still keep 100% of their Tesla stock (a very high number). They're fairly certain that FSD is the game changer at Tesla, more than batteries, SCs, motors, etc.


Dan Detweiler

Active Member

OK, time to come clean...

I am an absolute idiot...in regard to some things. I have virtually no knowledge of many things that impact my life. I will say that I consider myself very knowledgeable in some regards, most of which no longer have any impact on my financial well being. Things like teaching music I can have a very in depth, knowledgeable discussion about. Stock however, is definitely not in my wheelhouse of enlightened perception.

A good friend of mine has been telling me how crazy I am to be involved in TSLA for a couple of years now. He is, unlike me, VERY well schooled in everything stock market. He follows the charts religiously. He looks at all the trends. He follows all of the best advice regarding his investments. He is glued to a company's "fundamentals" (I still don't really understand what that is precisely). In short, he is WAY out of my league when it comes to the financial markets. He is genuinely concerned for me that I am so invested in such a fraud that is led by such a criminal as Elon. (Yes, those are his words.)

Shorting, hedging, puts, calls, charts, "Max Pain" (what the hell is that anyway?) This stuff is all Greek to me and I don't understand it one bit. So, why the hell do I own TSLA? Great question. Well, all I can say is this. I heard about the company back when it was just the Roadster and the Model S. I liked what they said they were trying to do. I could never afford their products, but was amazed by what they were capable of. I test drove a Model S. "OMG this thing is incredible!" They announced the Model 3. On a whim, I made a reservation just because it made me feel good. I bought a little stock. First time ever buying any stock of any kind. I had a two year wait before anything went final so I started doing some more research. "Who the hell are these people that call themselves TSLAQ?" "Is the company really a fraud?" "Is it all a lie?" Two years later I get the email...time to configure or cancel. By that time my little investment has earned enough for a sizable down payment. I go for it and sell my TSLA shares. Get the car...game over.

6 months into ownership and I have a little money to play with. I get back in. About a year later I have the opportunity to purchase some land for our dream retirement home. Yup, you see where I'm going. Made enough on TSLA for the purchase. I leave a small number of shares untouched. In the weeks since the land purchase the stock has skyrocketed and is making me more money. By the time my Cybertruck reservation (yeah, I jumped on that bandwagon too) comes to delivery, I hope to have made a chunk for another sizable down payment for it. TSLA has made it possible for me to have things I never would have been able to have without it.

So...my investment philosophy, coming from a guy that knows absolutely nothing about how the market works? Find a company you believe in. Check it out. Experience what they offer. Then, if it feels right and you have some discretionary funds...pull the trigger and then ride it out knowing you are supporting a company that makes sense for you. Yeah, you could lose everything, but if you know that is a possibility going in, it shouldn't hurt as bad if it all goes belly up. The upside?...well, for me it has been amazing!

My friend still knows WAY more than me about investing to make money. But, I don't think he gets what it feels like to be part of something you really believe in. He STILL thinks I am an idiot for investing in Tesla. LOL!

Definitely, unequivocally, without a doubt NOT, IN ANY WAY advice!

(feeling really happy about my little investment though)

Dan


Facepalms from the future.

Tslynk67

Well-Known Member

Just a heads-up on an excellent Twitter thread by our own @KarenRei - I'm humbled daily by the intelligence of the posters here and the insight I learn on any number of matters, sometimes even Tesla and investment related!

Nafnlaus on Twitter

And the unroll: Thread by @enn_nafnlaus: "It's recently been in the news that "International Rights Activists", a law firm run by Terry Collingsworth, has sued Google, Microsoft, App […]"

