All,

This is my first post on this website, and I just want to share an experience with you all. My wife was driving our Model Y on local roads with FSD engaged.

Question: the vehicle smoked for the first few seconds after the collision, then stopped. Both front airbags deployed. Given the damage shown in the pictures below, does anyone know how Tesla Insurance will handle a case like this? We are in communication with the insurance company and the estimate center, but it takes time, especially with the holiday season coming.

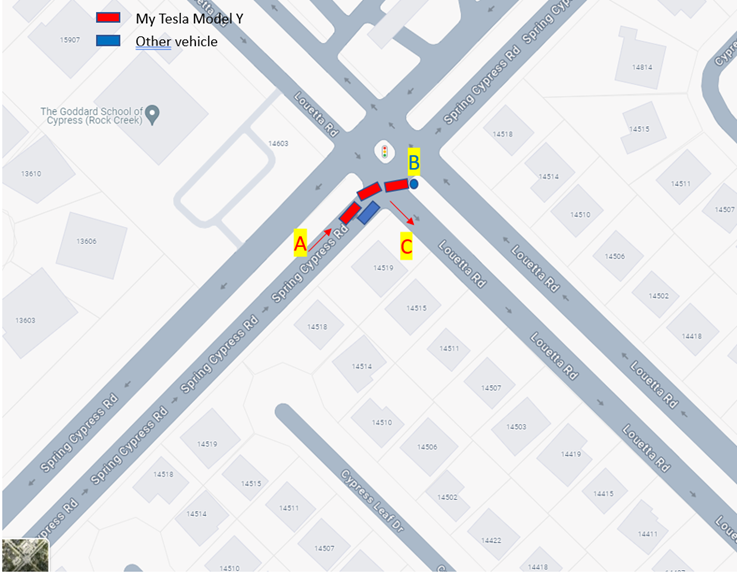

- The vehicle was at location A in the figure (the left lane of the two lanes on Spring Cypress Rd).

- The vehicle was supposed to make a right turn onto Louetta Rd (position C).

- Because another vehicle was on its right, my Model Y could not change from the left lane to the right lane before the turn.

- However, per the map, my Model Y needed to turn right at the corner. It was not supposed to do so from the left lane, because only the right lane is allowed to turn right.

- At the corner, FSD hesitated over what to do before the turn. After the other vehicle passed, it decided to make the right turn.

- After making the right turn at very low speed, it accelerated and hit a community sign (position B in the figure), causing the collision. The computer thought that area was a drivable lane, but it is not.
