I have now had enough experience with FSD 12 to say that I believe Ashok is correct:

I would also stress we are at the beginning of the end. That is, we will hopefully now start to see significant improvement in the "known FSD issues" and "corner case disengagements" with every major FSD release. "The end" (Level 3/4 autonomy within a wide Operational Design Domain (ODD)) is almost certainly a year or more away. I'd like to document, from one consistent anecdotal use case that can be repeated over time, where we are "starting from."

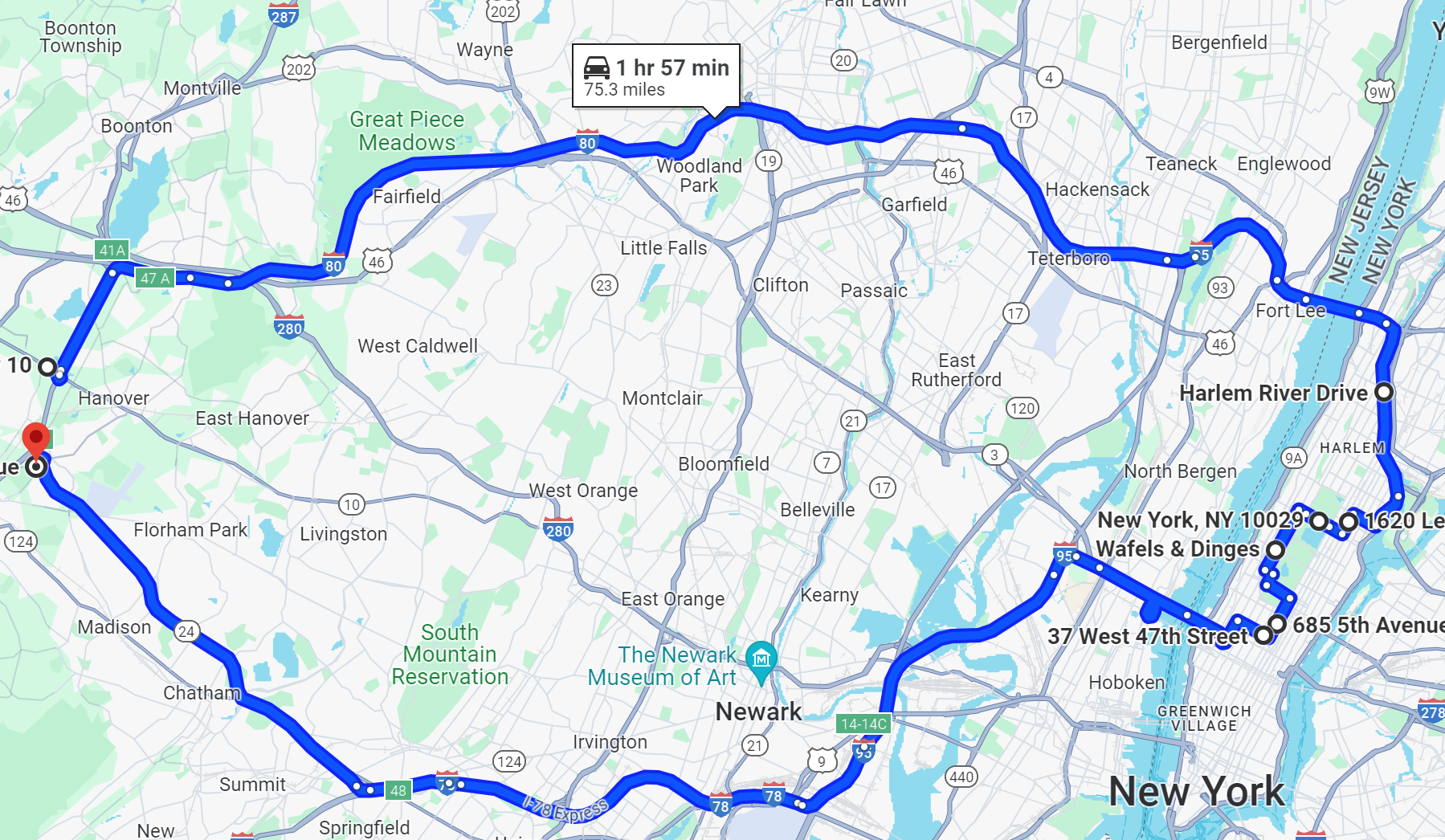

I will be driving a 90ish mile (2-3 hrs) "loop" under FSD to cover a range of driving scenarios. (Mileage is approximate):

For privacy reasons, about 15 miles of my loop (the part that takes me to and from my actual home) are not included in the link below.

Here is a link to inspect the route in detail.

This route takes me from NJ Suburbs into and out of Manhattan (NYC) and includes approximately:

- 10 miles of suburban driving

- 65 miles of "limited access highway" driving (this should be using the FSD "highway stack," which is not the same as the FSD 12 stack)

  - Includes interchanges

  - Includes tolls

  - Includes a tunnel

- 8 miles of other "highway type driving" (will probably fall under the FSD 12 stack)

- 6 miles of dense city driving, including areas around Times Square, Rockefeller Center, etc., which will have dense vehicle and pedestrian traffic

I will report on:

- Interventions (Accelerator presses, particularly if safety related)

- Disengagements (comfort or safety related)

- Overall impressions

- Smart Summon or "Banish" is not available to test, so what I call the "first 100 yards and final 100 yards" (drop-offs / pick-ups) cannot be covered.

- "Reversing" while on FSD is not yet supported.

- "Lane selection wobble": for example, approaching an intersection where a single driving lane splits into multiple lanes (turning vs. straight), FSD may act "indecisively."

- Unprotected turn (stop sign) behavior. Notably, it stops early, then creeps. If no cars are detected, it may creep into the intersection instead of "just going." Further, if it has crept into the intersection and THEN detects a car approaching, it may still hesitate and require an intervention (accelerator press) to get it going.
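For anyone who wants to keep a comparable log of their own loop, here is a minimal Python sketch of how the interventions and disengagements could be tallied per drive. It is not a tool I am using, just an illustration: the names `ROUTE_MILES`, `Event`, and `LoopLog` are hypothetical, and the segment mileages are the approximate figures from the route breakdown in this post.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Approximate segment mileage from the route description (assumed keys).
ROUTE_MILES = {
    "suburban": 10,
    "limited_access_highway": 65,
    "other_highway": 8,
    "dense_city": 6,
}


@dataclass
class Event:
    kind: str      # "intervention" (accelerator press) or "disengagement"
    reason: str    # "comfort" or "safety"
    segment: str   # key into ROUTE_MILES
    note: str = ""


@dataclass
class LoopLog:
    fsd_version: str
    events: List[Event] = field(default_factory=list)

    def add(self, kind: str, reason: str, segment: str, note: str = "") -> None:
        # Reject segments that are not part of the loop definition.
        if segment not in ROUTE_MILES:
            raise ValueError(f"unknown segment: {segment}")
        self.events.append(Event(kind, reason, segment, note))

    def count(self, kind: str, reason: Optional[str] = None) -> int:
        # Count events of one kind, optionally filtered by reason.
        return sum(
            1 for e in self.events
            if e.kind == kind and (reason is None or e.reason == reason)
        )

    def miles_per_disengagement(self) -> Optional[float]:
        # Returns None for a loop with no disengagements.
        n = self.count("disengagement")
        return sum(ROUTE_MILES.values()) / n if n else None
```

Keeping the same segment keys from drive to drive is what makes loops on different FSD versions comparable.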

Although I have driven FSD regularly over the past 3 weeks...I have yet to take it into NYC.

Vehicle: Refresh Model S (2023), Vision Only, HW4. First test will be using:

Firmware version: 11.1 (2024.3.15)

FSD Version: 12.3.4

So...there's the set-up. I expect later today to drive the first loop.
