Yeah, I saw Mr. @jjrandorin's post earlier today, but was in the middle of diagnosing fried inductors.

So, I'm going to attempt to summarize all the stuff that's come above and perhaps make it all a bit clearer. And, in the process, give a solid understanding of what's going on with all the Power Conversion Stuff, anyway.

So, let's start off on the correct foot: What we're attempting to do is move energy from City Power to the Battery. The rate at which energy gets moved from point A to point B is measured in Watts. (We got a lot of dead early researcher names floating around here.. Watt, Joule, Ohm, Ampere, etc.)

Just to make sure that we speak in clear language, I'm not going to play around with the words, "kilowatt hour" much. That's a weird one put together by a bunch of power companies back in the day. Let's stick with Joules. A Joule is a unit of energy, the symbol is "J". There's 3.6 MJ in a kW-hr.

A Watt is a rate, like gallons per minute. In fact, the definition of a Watt is, exactly, 1 J/s. That 100W lightbulb in the fixture to your left? Right, it's using 100J of energy each and every second. A lump of coal, when burnt, gives off 23.9 MJ per kg; power companies pay for coal, they burn the stuff, that boils the water, that turns the turbine, that spins the generator, that generates the energy.

You all pay for the energy which is directly attributable to that lump of coal, and you pay your fair share to the electric company.

Fine. Great. So, the number of Watts flowing on electric wires is the rate of energy transfer from the power company to wherever it is we want it.
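For anybody who wants to push the units around themselves, here's a minimal Python sketch of the bookkeeping above. The function names are mine, and the coal figure pretends every Joule of heat turns into electricity, which no real power plant manages; it's only there to show the arithmetic.

```python
# Unit bookkeeping for the figures quoted above.
JOULES_PER_KWH = 1_000 * 3_600   # 1 kW for 3600 s = 3.6e6 J
COAL_J_PER_KG = 23.9e6           # heat content of coal, as quoted in the post


def energy_joules(power_watts, seconds):
    """Energy moved at a constant rate: a Watt is exactly 1 J/s."""
    return power_watts * seconds


def coal_kg_for(energy_j):
    """Coal burned for that much heat, ASSUMING lossless conversion (hypothetical)."""
    return energy_j / COAL_J_PER_KG


bulb_hour = energy_joules(100, 3_600)       # 100 W bulb left on for an hour
print(bulb_hour, "J")
print(bulb_hour / JOULES_PER_KWH, "kW-hr")
print(coal_kg_for(bulb_hour), "kg of coal (lossless assumption)")
```

Same energy, three different ways of writing it down.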

So, Mr. Ohm was interested in this flow of energy and spent a ridiculous amount of time characterizing these flows across different materials. Turns out, it wasn't easy figuring it all out, but, eventually, he came up with what we call Ohm's Law: Voltage = Current * Resistance in a circuit. Further, Power = Voltage * Current. (Note: These equations are great when one is playing around with electromagnetic fields in and around conductors, but they tend to break down when energy gets transferred into the Ether. Maxwell's equations cover everything, including photons traveling through free space, but it's a lot simpler to stick with Mr. Ohm if one is playing with wires.)

Let's define current. An Ampere is defined as the movement of one Coulomb of charge per second through a conductor. A Coulomb, for those of you who don't remember Middle School physics, is an amount of charge: the charge on about 6.242 x 10^18 electrons. (Don't mix it up with the mole from chemistry class, which is a number, like, say, a million, only a mole is 6.022 x 10^23. Why a mole? Because a mole of something has a mass, in grams, of the atomic weight of whatever-it-is. Atomic weight of Iron (Fe) is 55.84; so a mole of that material would weigh 55.84 g.)

Let's talk about that resistance a bit. Let's say that we have a conductor. Further, let's talk about metal conductors. The point about a metal conductor is that it's an array of atoms that are embedded in a gas of free-moving electrons. At room temperature, silver turns out to be the material where the electrons in the conduction band flow the easiest, in that they're not coupled very much to the atomic nuclei in the conductor. However, nothing's perfect: When current is flowing in silver, the occasional electron is going to nail the silver atom (with all the electrons that aren't in the conduction band) dead on, at speed: This makes the silver atom bounce around and, well, that's temperature. Electrons change orbitals as a result, then head back to their original spots, giving off photons of infrared as they go; those photons get absorbed by other silver atoms, get radiated away, and so on: But run a big enough current through ye silver wire and it's going to get hot. Hot enough, and it melts. And/or vaporizes. And, in any case, converting electrical power to heat energy doesn't do a heck of a lot of good about depositing energy into a battery: It's a loss, and we like not having losses.

So, those of us who muck with conductors have a term, called the resistivity. Like many other fundamental terms, the EE's have co-opted a Greek letter for this term, rho. The units of this beast are in Ohm-meters. Why that? Because the resistance of a straight conductor is

R = rho * (length of the conductor)/(Cross-Sectional Area of the conductor).

Rho for copper, a decent conductor, is 16.8e-9 Ohm-meters. Rho for silver, a better, but rarer (and more expensive) conductor is 15.9e-9 Ohm-meters. Gold, which doesn't corrode, but is really expensive, is 22.14e-9 Ohm-meters. Everybody tends to use copper.
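To put numbers on R = rho * length / area, here's a small sketch using the three resistivities quoted above. The function name and the 1 km by 1 square millimeter test wire are just picked for illustration.

```python
# Resistivities in Ohm-meters, as quoted in the post.
RHO_OHM_M = {"copper": 16.8e-9, "silver": 15.9e-9, "gold": 22.14e-9}


def wire_resistance(material, length_m, area_m2):
    """Resistance of a straight, uniform conductor: R = rho * L / A."""
    return RHO_OHM_M[material] * length_m / area_m2


# Hypothetical test wire: 1 km long, 1 mm^2 cross-section (1e-6 m^2).
for metal in RHO_OHM_M:
    ohms = wire_resistance(metal, 1_000, 1e-6)
    print(f"{metal}: {ohms:.2f} Ohms")
```

Silver comes out a few percent better than copper and gold a fair bit worse, which is about what the rho values say should happen.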

So, why high tension lines? Suppose that we're trying to move 250 kW from point A to point B. Let's pick a voltage, any voltage. Let's try.. 1 V!

Since P = V*I, that means that the current I needed to carry this power would be 250e3/1.0 = 250e3 Amps. Yeah. Suppose we want to carry this a kilometer, and we'd like to lose, say, 0.1% of the power being transferred to heat in the wire. So, the current being the same in the wire everywhere, we can take the power in the wire as P = I * V, but V = I * R (Ohm's law), and we get the loss in the wire as P = I * (I*R) = I^2*R. So, 250 kA, we'd like to only lose 0.1%, so the maximum power we'd like to dissipate would be 250 kW * 0.001 = 250W. And the resistance would have to be R = P/(I*I), or 250W/(250e3*250e3) = 4e-9 Ohms. Four nano-Ohms. Sure.... Well, R = rho*length/(area). We know rho, it's a kilometer long wire, so the area would have to be (in square meters)

A = 16.8e-9 * 1000/(4e-9) = 4.2e3 square meters. If that thing was a square bus bar, that's about 65 meters on a side. And a kilometer long. I think the accountants would have a problem with that.

So, let's go to the other extreme: Let's run at 100kV, and we'd still like 0.1% losses in that kilometer. First, I = P/V, so 250 kW/100kV = 2.5A. That's a lot better. Next, we're still losing 250W for 0.1%. But P = I*I*R, so R = 250/(2.5*2.5) = 40 Ohms. Finally, a copper wire, a kilometer long with 40 Ohms across the length would have an area of A = 16.8e-9*1000/(40) = 4.2e-7 meters squared, or (if it was a square wire) 648e-6 m across, which is 0.648 mm across. That's roughly a 20 AWG wire. The accountants won't get as mad.
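Both of those cases are the same four lines of arithmetic, so here's a sketch that wraps them in one function. The function name and the returned dictionary keys are mine; the defaults assume copper and a square cross-section, same as the hand calculation.

```python
import math


def size_wire(power_w, volts, length_m, loss_fraction, rho=16.8e-9):
    """Conductor size needed to move power_w over length_m with a given loss.

    Follows the steps in the text: I = P/V, allowed loss = P * fraction,
    R = loss / I^2, area = rho * length / R. Copper rho is the default.
    """
    current = power_w / volts
    loss_w = power_w * loss_fraction
    resistance = loss_w / current**2
    area = rho * length_m / resistance
    return {
        "current_a": current,
        "resistance_ohm": resistance,
        "area_m2": area,
        "square_side_m": math.sqrt(area),  # side length if the wire were square
    }


for volts in (1.0, 100e3):
    r = size_wire(250e3, volts, 1_000, 0.001)
    print(f"{volts:g} V: {r['current_a']:g} A, {r['resistance_ohm']:g} Ohms, "
          f"{r['area_m2']:g} m^2, {r['square_side_m']:g} m on a side")
```

Plug in other voltages and the pattern shows up fast: the required copper area goes as 1/V^2, so every doubling of the voltage cuts the copper by a factor of four.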

So, why aren't we running 100 kV everywhere and knocking out losses?

- Corona discharge. Not mentioned in any of the above are the Electric Fields around those wires. They're typically measured in Volts/Meter. These fields terminate on the wire at one end and ground at the other; and they're strongest right at the surface of the wire. With a 20 GA wire at 100 kV, the electric field is strong enough to rip the electrons right off the molecules in the air. This makes for a really pretty light show and more losses. For that reason, one will notice that many high tension wires are physically thick, like an inch or two, precisely to cut back on this kind of discharge. The Really High Tension systems have, instead of one conductor, a quad of conductors that are, like, four or five inches to a face, that emulates an even larger physical wire which cuts down on the corona discharge even more.

- Solid insulators aren't that helpful either. A standard pane of window glass, in the presence of an E-field like that, will go, "Snap" and start conducting as the E-fields rip the electrons loose from SiO2. If you've ever looked at a high tension wire and the insulators that hang those wires to the towers.. those insulators are, physically, lllloooonnnnngggg, to reduce the volts per meter from one side of the glass insulator to the other.

- Safety. Not only will those high E-fields rip electrons loose from air molecules, they'd have no problems ripping electrons loose from you. Which is not healthy. There's Reasons why those power switch yards have big fences up with signs saying, "Go No Further".

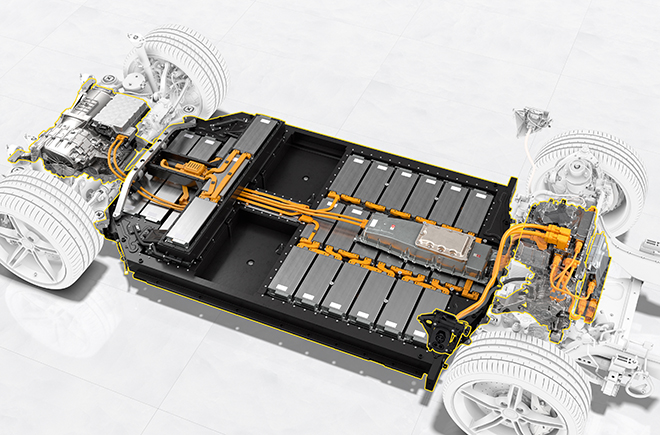

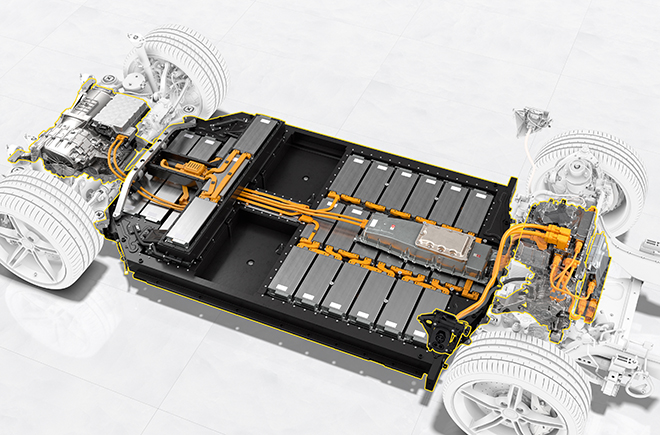

Now that we got the basics cleared, let's go play with Teslas and other BEVs.

First, another factoid. These days, so long as one is below roughly 400V to 500V, there exist highly efficient DC-DC conversion boxes. These functional blocks take in a DC voltage and current; then, using high-voltage, high-current switching transistors, this current is put back and forth through a winding on a very efficient transformer. That's the primary of said transformer. On the other side of the transformer, the secondary, a voltage and current appears there, too; that can be synchronously rectified and filtered to make a second, output, DC voltage and current. By messing around with the switching transistors and the turns ratio on the transformer, one can take in pretty much any arbitrary voltage and current and make another voltage and current, at the same power as the input (minus roughly 2%-5% due to losses in the transistors and transformer). We're talking 95% to 98% power efficiency here. So long as the switching frequencies are up above 150 kHz or so (and they are: The really advanced stuff gets up to 10 MHz), the physical component sizes are small and the parts are relatively cheap. And it'll all fit into a car, whereas those power transformers one sees on telephone poles and boxes on street corners won't.
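Seen from the outside, one of these boxes is just power in, power out, minus the efficiency hit. Here's a black-box sketch of that; it models nothing about the switching or the transformer, and the function name and the example voltages and currents are made up for illustration.

```python
def dcdc_output(v_in, i_in, v_out, efficiency=0.96):
    """Black-box DC-DC converter: output current and heat for a given efficiency.

    efficiency is a plain assumption in the 0.95 to 0.98 range quoted above;
    a real converter's efficiency varies with load and voltage.
    """
    p_in = v_in * i_in
    p_out = p_in * efficiency
    i_out = p_out / v_out
    heat_w = p_in - p_out          # what ends up warming the transistors and transformer
    return i_out, heat_w


# Hypothetical example: 400 V at 100 A in (40 kW), 200 V out, 95% efficient.
i_out, heat_w = dcdc_output(400, 100, 200, efficiency=0.95)
print(f"{i_out:.1f} A out, {heat_w:.0f} W of heat")
```

Halve the voltage and the current roughly doubles; the few percent that goes missing is the heat somebody has to get rid of.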

Second, bonus factoid: The battery charger in a BEV is exactly one of these blocks. DC in from rectifiers hooked to 120VAC or 240 VAC, or from a Supercharger; DC to the battery, out. And the output voltage and/or current of the charger is varied by the battery control module.

(I've heard reports that, with Tesla, the Supercharger DC voltage is varied by commands from the car, rather than there being an actual charger in the car. Believable, but I haven't been able to find a decent block diagram yet to check. Doesn't change, really, what's to follow, though.)

So, why would 800V be better or worse than 400V, fundamentally? There's pluses and minuses.

800V is twice as high; therefore, the current is half as much at the input to the BEV.

- Pluses

- The current is half as much for the same amount of power, so, copper losses are less.

- Likewise, the current in the switching transistors is half as much, so less power dissipation in them, as well as the primary side windings in the transformers.

- Minuses

- Alrighty, then: 800V is a lot bigger voltage and one has to worry about the insulation. That's some thick insulation on the 400V wires; it has to be twice as thick for 800V, and that's space and money. Maybe enough to negate the use of less copper for 800V vs 400V.

- Switching transistors. So, I play, occasionally, with power transistors designed to handle 100V or so. The higher the voltage that a MOSFET transistor has to handle, the bigger its internal resistance gets to be. That causes additional losses over lower-voltage transistors used in 400V systems. Again, it's I*I*R, but we got half as much current.. But I don't know what R gets to when one bumps the voltage up to 800V. There are ways to keep the efficiency up, though:

- More transistors in parallel. ($$).

- Move to a different type of transistor. There are high-power, semiconductor switching transistors that are made out of Silicon Carbide. Really. ($$$).

- Live with the additional heat (Silicon Carbides are good for that kind of thing). But it's possible that one might need to apply some kind of cooling to those hot switching transistors - liquid, air blowing by, etc. That might not be hard to come by, given the liquid cooling found in every BEV except, apparently, the Leaf. But it'll be ($$), or even ($$$$).

- Safety. Yeah, it's all inside the car and sealed away. And 400V is lethal anyway. But 800V is certainly more lethal. Not that any hot-rodder should be messing around near those big wires in any case. But.. car crashes? An electric switching yard never undergoes a rear-ender; cars, inevitably, will. Is some safety expert going to decide that putting switching-yard-style high-tension wiring in them is a good idea?
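To put a number on the "copper losses are less" plus, here's a sketch comparing I*I*R at 400V and 800V for the same power through the same cable. The 250 kW and the 0.01 Ohm cable resistance are hypothetical values picked for round numbers, and keeping the cable the same is itself an assumption: a real 800V design might thin the copper and take the savings as cost instead.

```python
def copper_loss_w(power_w, volts, resistance_ohm):
    """Heat dissipated in a conductor carrying power_w at volts: I^2 * R."""
    current = power_w / volts
    return current**2 * resistance_ohm


POWER_W = 250e3        # hypothetical charging power
CABLE_OHM = 0.01       # hypothetical cable resistance, same for both cases

loss_400 = copper_loss_w(POWER_W, 400, CABLE_OHM)
loss_800 = copper_loss_w(POWER_W, 800, CABLE_OHM)
print(f"400 V: {loss_400:.0f} W lost, 800 V: {loss_800:.0f} W lost, "
      f"ratio {loss_400 / loss_800:.1f}x")
```

Half the current means a quarter of the copper loss for the same wire. It says nothing about the transistor R going up with voltage, which is the part I don't have numbers for.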

So, I dunno. I've seen the comments above about how the current can be modified with the higher voltage in strange ways that result in better battery charging profiles. I.. don't think I agree. I think the main reason for going to the higher voltage is to cut back on the losses and, eventually, the cost of building the car by thinning out the copper and reducing the power consumption needs in the transistors and motor.
