Sorry, you clearly have an agenda, so there is little point in discussing this further. Apparently you are angry at Tesla for not giving you something you wanted, which is fine, but sadly this seems to have spilled over into your assessments of FSD. In what way is it "laughably bad" that the car is doing 8 miles between DEs, most of which (as I noted) are "embarrassment driven" because the car is being super-cautious? No one else has ANY form of ADAS system anywhere near FSD, but apparently you "know" how fast they are supposed to progress on FSD, based on... WHAT exactly?

There are many accidents if you trust the NHTSA reports (which are public). I disagree that the press is loud about FSD. Quite the contrary, given the scale of this bait-and-switch scam.

Yes, it is nonsense, since your statement was "Yet the early numbers for FSD beta also look good". Now you're talking about miles, but that's irrelevant given the undisclosed number of incidents, which companies with autonomous ambitions typically have to report to the DMV by law. But not Tesla.

I don't think NHTSA is done. They have decided on a strategy, which seems to be "pick on all the unsafe operations". It's a smart strategy, and really the only way forward as I see it. I think NHTSA will keep doing this until Tesla promises that they've fixed it. Since it's impossible to provide such guarantees in an unbounded ODD, NHTSA will get their chance to shut down this experiment if they choose to. Given Tesla's track record of non-improvement in terms of reliability, this likely won't take too long... <8 miles / DE for over 20 months; it's laughably bad.

No, I haven't had a chance to get it delivered to my car, and Tesla won't be able to either in the coming 2-3 years. This is something I learned by researching the UNECE R79 amendments and drafts. My car will be six years old by then, and it doesn't even have NoA, and Tesla won't refund. They keep selling FSD in the EU to more sheep.

With regard to reliability, I look at the data. At first I looked at the reports by Dirty Tesla, and more recently at the Community Tracker: miles / DE is alarmingly flat, at 4-8 miles over 30 months of public beta. Not bullish.

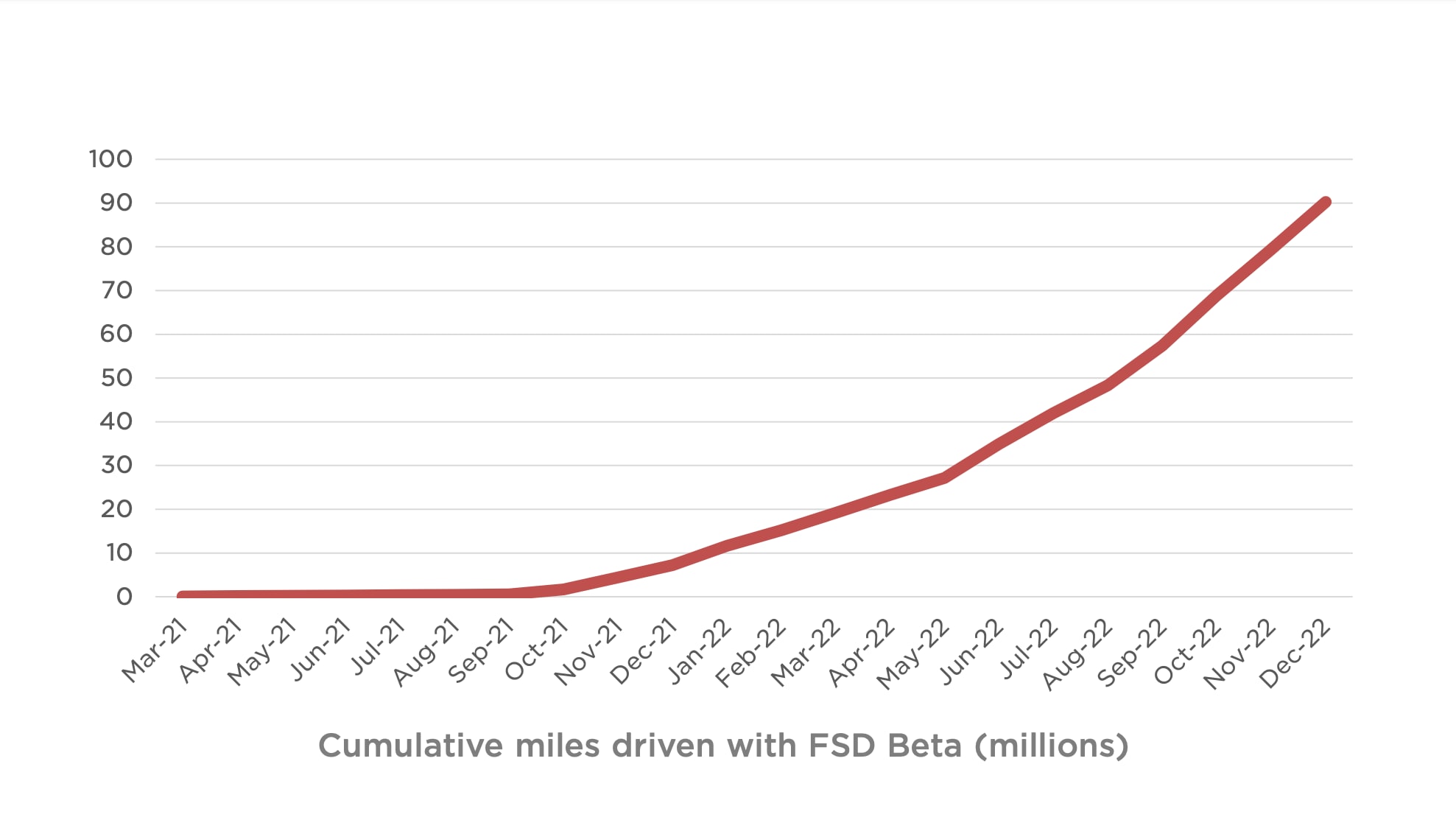

As for mileage, I stand by my statement: FSD has driven a LOT of miles, so where are all the accidents if it's so bad? And OF COURSE the press would jump on them instantly, as they do with all things Tesla. Why would they not? They get ZERO advertising revenue from Tesla. And of course they DO: any potential Autopilot accident involving a Tesla is headline news. But, again, after the hysteria has died down, it mostly turns out NOT to be AP/FSD (for example, the Model S that hit a tree at high speed).