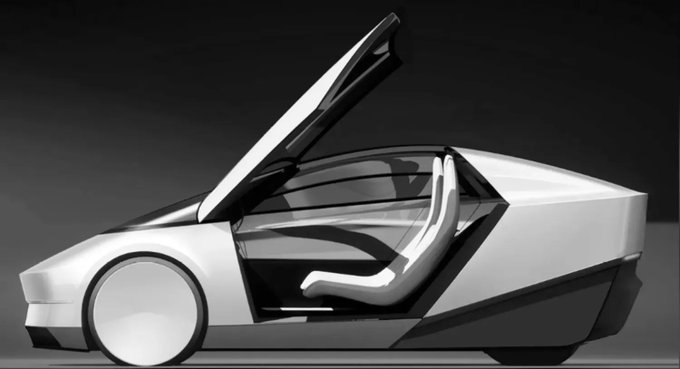

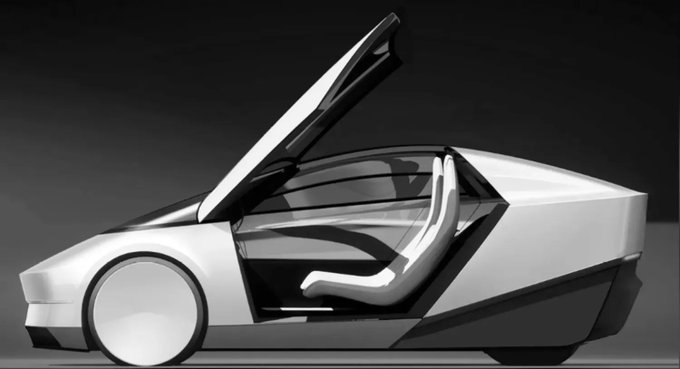

So Elon says that Tesla will reveal a dedicated robotaxi vehicle on 8/8. What do you think we will see? Will it look like this concept art or something else?

I will say that while this concept drawing looks super cool, I am a bit skeptical that it is practical as a robotaxi. It appears to have only 2 seats, which would be fine for 1-2 passengers but would not work for groups of more than 2. I feel like that would limit the robotaxi's value for a lot of people. Also, it would likely need a steering wheel and pedals for regulatory reasons, even if Tesla did achieve eyes-off capability.

So I think this is concept art for a hypothetical cheap 2-seater Tesla, not a robotaxi.

Could the robotaxi look more like this concept art but smaller? It could look a bit more like, say, the Zoox vehicle or the Cruise Origin: a more futuristic, box-like shape IMO, seating 5-6 people.

Or maybe the robotaxi will look more like the "model 2" concept:

Other questions:

- Will individuals be able to buy robotaxis as personal cars, or will they be owned strictly by Tesla and used only in a ride-hailing network?

- What will it cost?

- Will it have upgraded hardware? Radar? Lidar? Additional compute?

- Will Elon reveal any details on how the ride-hailing network will work?

Thoughts? Let the fun speculation begin!