MarcusMaximus

Active Member

I thought AP2 s/x didn't have dedicated rain sensors and that Tesla is going to have the cameras do that instead. If they do, then I don't know why auto wipers are not activated yet.

I don't think it's fully known what that sensor is or what it's used for.

They may have been doing high-speed, high-acceleration runs prior, which would explain the high consumption. That also may have heated the battery, which would explain the slow charge rate. These are mules, so they're going to be out stressing them to try and get them to break, not tiptoeing through the tulips.

The charge screens don't base their range gain estimates on your driving average. They use the same static number the rated range estimator does.

I think the earlier suggestion that the car was getting far less than 70 kW initially due to stall pairing is more likely.

I doubt rain sensing will ever work with the camera since the focus is probably in the distance. I don't think the camera will be able to count raindrops very easily.

I don't think that's how it would work. It probably would have to do with distortion of the image that occurs when water gets on the glass.

whitex

Well-Known Member

The tiny sensor on AP2 that people think is a rain sensor is kind of small for sensing rain (raindrops may not fall on it). But most importantly, if it really was such a sensor, why on earth would Tesla not deliver this functionality in the last 8+ months? Unless, of course, the sensor is useless, or Tesla somehow cannot get their developers to get it done (if it is a dedicated rain sensor, how difficult would it be? And if they cannot get that done in 8 months, how on earth are they going to do FSD in the next year or even two?).

We have both an AP1 and an AP2 car. The AP2 car's light sensing sucks (lights turn on even in bright sunny weather) and rain sensing does not exist at all. The AP1 lights work well; its rain sensing is not perfect, but it's there.

3Victoria

Active Member

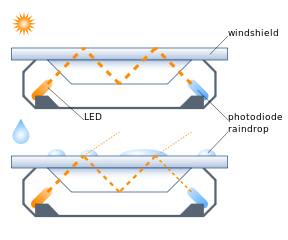

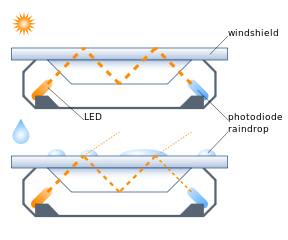

This article Rain sensor - Wikipedia shows a picture of a rain sensor:

And explains they work by reflecting infrared off the glass/environment interface:

It seems to me that doing rain sensing with a camera is difficult because of focal length. Perhaps detecting distortion as the view changes due to motion might be detectable?

whitex

Well-Known Member

Exactly. If you've ever worn glasses in the rain, you know how easy it is to not see the droplets on the lenses. Unfortunately, Elon doesn't wear glasses or live somewhere where it rains much, hence we are where we are with no rain sensor on AP2.

3Victoria

Active Member

Sounds like a job for a summer intern.

Have you noticed the headlights come on after running the wipers for AP2 cars?

That doesn't happen with our pre-AP S P85.

I know this is getting slightly tangential, but I do have this behavior with my pre-AP S85 (early 2013 flavor). That is: if the wipers trigger (either from the rain sensor or manually initiated), the headlights do automatically come on when in "Auto" mode.

I don't think that's how it would work. It probably would have to do with distortion of the image that occurs when water gets on the glass.

I'm not a photographer or an AI vision expert, but I think lens flare can be detected.

In PA, if you have your wipers on, you're required by law to have your headlights on. Not sure how many other states have this law.

electracity

Active Member

I believe this thread wins the “Conjecturing based upon some photos and incomplete information” award

I agree. Using actual photos is going too far. We don't want to get all fancy here.

whitex

Well-Known Member

Even if it could be done (remember, in this case you need to detect rain on the windshield, not rain falling in the background or the fact that you are driving towards a water fountain), at what cost in terms of processing power? I think it's possible that whoever made the decision to replace the rain sensor with the cameras did not do a full cost-benefit analysis. Save $x on an infrared sensor, in exchange for how much processing power in terms of computer vision processing?

MarcusMaximus

Active Member

Most likely the ability to detect such distortion is also useful in driving, meaning that during training a network would likely learn to identify it anyway (and forcing it to may actually improve learning generally). It's not uncommon to have a network perform double duty on closely related tasks, as the combination can do better on both tasks than two separate networks would do on each one independently.

whitex

Well-Known Member

So the reason we don't have it yet is simply that it hasn't rained much in California since the AP2 introduction? The network is up and running, learning, yet there are no auto wipers and even auto-headlights on/off sucks. I recently noticed our AP2 car turns its lights on even in perfectly sunny weather (it was after 6pm, so maybe Tesla hard-coded when the lights should go on but hasn't taken into consideration that the sun goes down much later in the summer).
