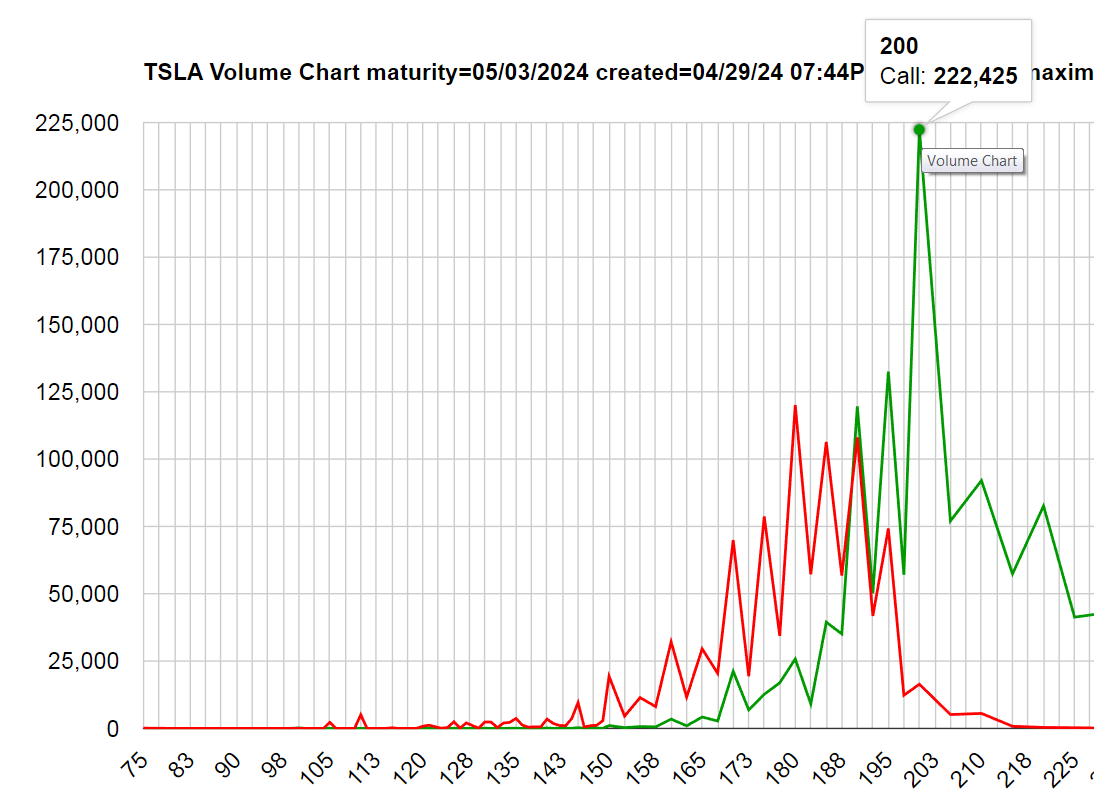

FYI, this is a common theme as I explore Max Pain for future weeks. Lots of Call trading at the 200 mark, but not much sticking for the Open Interest.

Quite the dance for a single day.

I agree. The best way to handle this type of turn is to not do it. Go right to a stop light and make a left or U-turn, or otherwise replan your trip. For years UPS has planned left turns out of its routes as much as possible. It would be entirely reasonable for FSD to handle this very dangerous left turn situation by rerouting. It takes a little longer but is worth it.

Seems likely to me that Tesla Robotaxis update Baidu maps and Baidu Robotaxis benefit from those updates. And Tesla benefits from the existing maps and any updates done by Baidu. With Baidu struggling to improve its own Robotaxi operations, there are obvious mutual interests.

I was under the impression volume is somewhat live but open interest doesn’t update until the following day. They can’t make it too easy for us.

Tesla using Baidu Maps should mean that all Tesla vehicles including Robotaxis improve Baidu Maps as they drive around.

One response from a Chinese owner. I find it interesting. Can anybody from here comment on this?

Boon

@booncooll

As a Chinese and a modelY car owner, Baidu is not a good choice. Amap is more trustworthy. Many Chinese Tesla owners need to install a mobile phone holder for their Tesla because they are not used to using Baidu Maps. Let’s use the Amap Map APP in the mobile APP. Amap Map is more accurate and easier to use than Baidu Map. But whether to use Amap or Baidu Map, ELON seems to have no choice.

Oh no!

There is a difference between annual production and deliveries and fleet size.

2m cars x 100$ x 12 x 10 x 0.3 = 7.2B / year in revenue.

For voting? What's the urgency? It will be open for some time.

If you are with Schwab and are into wearing out your F5 key:

H/T: @TeslaBoomerMama on X

It does do worse in the beginning. For instance: bringing my daughter to school, when going into the grounds the car needs to turn right followed by an immediate left turn. The first couple of days it could not do it. Now it nails it every time.

Does it really perform worse, or do you just get used to the new release?

That's a hard thing to justify really. If we're being really optimistic and assume a 30% take rate for FSD, average vehicle lifetime of 10 years, it means you get:

2m cars x 100$ x 12 x 10 x 0.3 = 7.2B / year in revenue. Triple the volume (a pipe dream with current factories and models) and you get only 22B in revenue. Factor in a software gross margin of 80% and that's only about 17B in bottom line.
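For anyone who wants to poke at those assumptions, here is a rough Python sketch of the same back-of-the-envelope math. The function and parameter names are made up for illustration; the inputs are just the numbers from the post (100$ a month, 30% take rate, 10-year vehicle life, 2m cars a year). It reads the result as a steady-state yearly figure, meaning ten years' worth of production is on the road and subscribing at the take rate, which is an assumption on my part.

```python
def fsd_subscription_revenue(cars_per_year, monthly_price=100.0,
                             take_rate=0.3, vehicle_life_years=10):
    """Steady-state yearly FSD subscription revenue in dollars.

    Hypothetical helper: assumes the fleet on the road equals
    vehicle_life_years of production at cars_per_year.
    """
    fleet_size = cars_per_year * vehicle_life_years
    subscribers = fleet_size * take_rate
    return subscribers * monthly_price * 12


# Numbers from the post: 2m cars a year, then triple that volume.
base = fsd_subscription_revenue(2_000_000)
tripled = fsd_subscription_revenue(6_000_000)

# 80% software gross margin, as assumed in the post.
gross_profit = tripled * 0.8

print(f"base: {base / 1e9:.1f}B, tripled: {tripled / 1e9:.1f}B, "
      f"gross profit: {gross_profit / 1e9:.1f}B")
```

With these inputs the tripled case lands near 21.6B in revenue and roughly 17.3B after the 80% margin. Changing `take_rate` or `vehicle_life_years` shows how sensitive the whole argument is to those two guesses.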

I have a quick question for the TA people.

Does today's rise mean there is a gap that is expected to fill?

TIA

edit for clarity

Greentheonly said: they must update entire firmware at least on the autopilot. Can't change a single file - breaks dm-verity. Can't overlay and have it survive a reboot (no dev overlay hooks in prod firmwares)

Thanks for your thoughts on FSD.

Interesting question. People have been batting this question back and forth for years on the FSD threads.

Here's an example: Near where I live, to get on US1 (yeah, that US1) one has to take an on-ramp. When getting on US1, one has maybe 100 yards (maybe less) to merge to the left to get into the slowest of three travel lanes on US1. If one misses that merge to the left and continues straight ahead on the on-ramp, one swiftly finds oneself on an off-ramp that takes one onto I-287 which will take one miles out of one's way.

I take this ramp onto US1 a lot. First time with 12.3.3, and again on 12.3.4, I had to disengage, then go across various painted lines to get to the left onto US1; that is, the car didn't merge over fast enough, partly due to traffic, but mostly because it has a more genteel approach to shifting to the left, keeping an eye out for All That Traffic on three lanes of US1.

2nd, 3rd, and Nth merges: The car starts signalling earlier and gets over, although it's still slower than a human, and it kinda crosses white solid lines a bit with the right side of the car before getting over.

On 11.4.9: It would make it one out of three tries. On 12.x, it's made it every time, except for the first try on a new release.

It's not just me: Others have noted this behavior. Not necessarily at some on/off ramp or other, but at some "difficult" spot in the road, be it speed, stop signs, or just funny intersections.

Which brings us into the Debate. In no particular order, but possibly in terms of popularity, we have the following hypotheses:

- It's The Map Data. People hypothesize that either at the start of a NAV-based trip, but possibly picked up dynamically so long as there's some kind of internet connection, Map Data, possibly crowd-sourced (based on interventions?) gets downloaded to the car, telling it to Watch It on certain intersections. This has been pointed out to explain poor initial behavior at stop signs, various kinds of merges, and strange intersections with multiple conflicting traffic signals. Right turn on red yes or no? Could be the Map Data.

- It's The Hidden Transfer Data. With FSD one is supposed to have diagnostic data being sent to the Mother Ship; certain people with more expertise than I have monitored the amount of data going back (and somewhat, forth). Typical uploads (it's mainly uploads) are measured in 100's of MB; some have reported single GB numbers. Now, this is commonly supposed to be videos and such. But there's no particular reason that this might not have "correction" data, map or otherwise.

- It's NN Learning Stuff. This is pretty much my horse, but I get yelled at all the time by people who say, "The binaries are signed with checksums and can't change, EVER!!!, until the next point release." Um. My CS chops say, "There's Always A Work Around.", and that work-around, if present, would presumably allow "error correction" to move various "fine control" sliders around, be it NN weights or other algorithmic stuff.

In the meantime, Official Tesla has said nothing about any of the above, or even hinted that Any Of The Above might be happening. Or not. And there's folks who disbelieve that any of the above are true or even possible.

But people have repeatedly noted horrible behavior after a new release that has been cured by

- Double-scroll-wheel reset

- Powering down the car for a time

- Waiting a day or whatever

- Calibrating the cameras (extreme cases)

- More recently, cleaning the glass under the cameras on the front windshield

Most of the above may be related to the common human method of tangling with obtuse systems by killing a chicken and studying the internal organs afterwards. Or just superstition. Or getting "Used to it", as you point out.

And, just to make things worse: Whatever the heck is going on under the hood on a Tesla running FSD, it's absolutely clear that there's a definite probabilistic component to the car's behavior because what seems to be different behavior in identical circumstances might just be some minor deterministic differences in what the car drove through in the last ten seconds.. or last ten minutes.. or the last ten days.

In other words, the car acts a bit like, say, a bunch of civilians standing on the goal line on a football field. Somebody yells, "Listen up! Everybody go to the other goal line!" Some people run, some people walk fast, some walk slow, but they all get there. Chaotic, probabilistic times for each civilian to the other goal line. FSD.. is a bit like that.

I stopped watching his videos when I realized it is far faster to go to the light and turn left with all the other cars seen in Google Street View instead of trying to be quicker but more dangerous, even as a human driver. It's a whopping .2 miles. During rush hour probably actually faster than waiting, definitely safer.

But you see, if a car cannot solve the unprotected left with 65mph cross traffic over 6 lanes with a blocked view, it cannot solve robotaxi

-said by no other robotaxi engineers from Waymo, Cruise, or anyone for that matter.