UnknownSoldier

Unknown Member

What are the odds of the media reporting on this now that it's over, compared with the coverage when it started? One less thing to deal with…

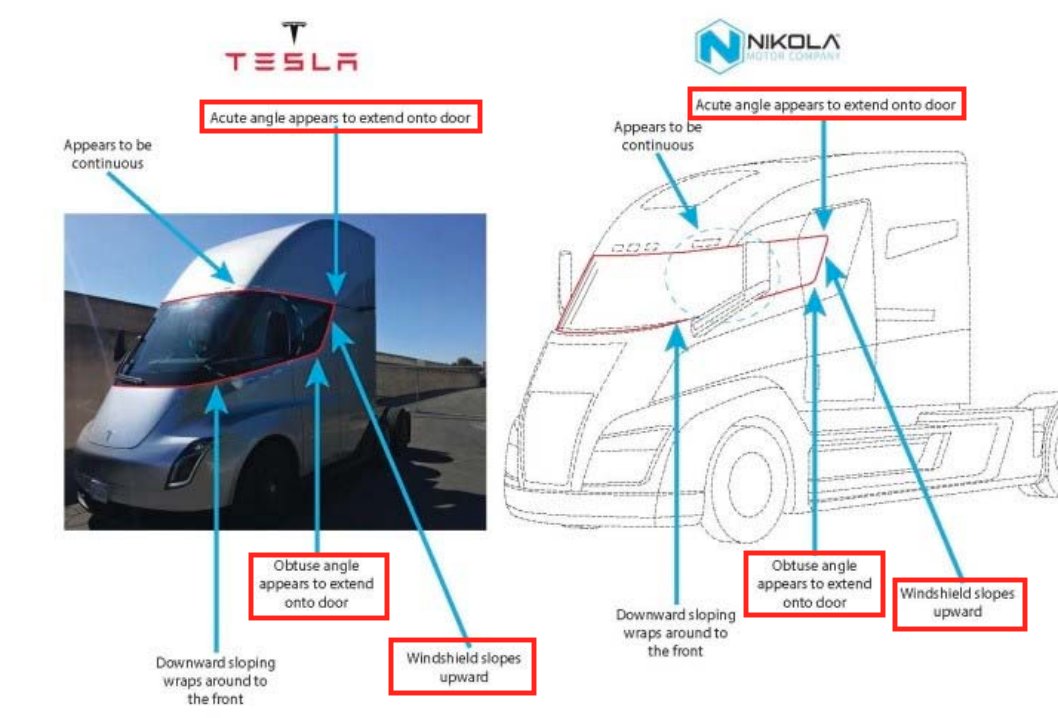

Nikola drops $2 billion patent infringement lawsuit against Tesla

After recently settling with the US Securities and Exchange Commission (SEC) and agreeing to pay $125 million over federal securities law violations, Nikola Corp. has dropped its $2 billion patent infringement lawsuit against Tesla. According […]

driveteslacanada.ca