stopcrazypp

Well-Known Member

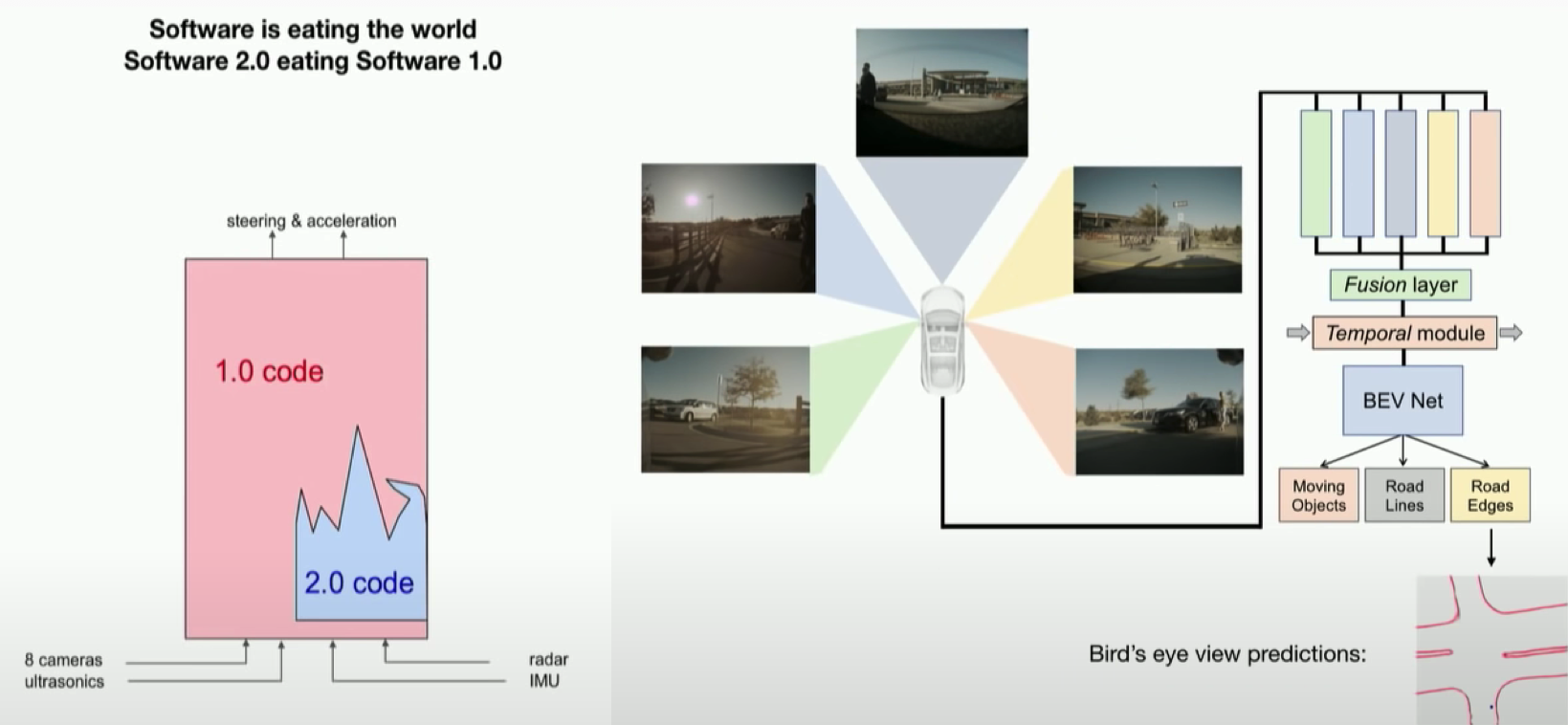

> Everyone has their opinion on how they think AP works, but what I've told you are facts regardless of opinion. My background is actually in computer vision; I have been working on dedicated computer vision projects since 2016 and have personal friends on the AP team at Tesla. Convolutional neural networks take in frames; you can create a pipeline that starts with stitched frames (e.g. video) and has them parsed out on the fly, which makes it only seem like you are working with "video". The "training" that occurs is a supervised approach in which annotators look over parsed video frames and label objects of interest. The labeling process creates an XML file with the coordinate points of what you labeled and its classification. That is what then goes into training, and it is why this is a supervised approach. At inference time (e.g. when you are driving your car on Autopilot), the video is read in, parsed into frames, and run through the layers of the convolutional neural network, which infers object coordinates. Fast forward this to 20 FPS of processing on the GPU and you have live "video" inferencing.

The NN is still working with only one frame at a time in your example. Anyway, instead of me paraphrasing, here is what Elon has said about the distinction between what they are doing with their latest software and what they have been doing previously:
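To make the labeling step in the quote concrete, here is a minimal sketch of reading one frame's annotation file. It assumes a Pascal VOC-style XML layout (a common public format; the quote does not say which schema Tesla's tooling actually uses), and the file name, class names, and coordinates are made up for illustration.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET
from dataclasses import dataclass

# Hypothetical annotation for one parsed video frame, in a Pascal VOC-style
# layout: one <object> per labeled item, with a class name and a bounding box.
SAMPLE_ANNOTATION = """
<annotation>
  <filename>frame_000123.jpg</filename>
  <object>
    <name>traffic_light</name>
    <bndbox><xmin>412</xmin><ymin>88</ymin><xmax>436</xmax><ymax>140</ymax></bndbox>
  </object>
  <object>
    <name>car</name>
    <bndbox><xmin>120</xmin><ymin>300</ymin><xmax>380</xmax><ymax>470</ymax></bndbox>
  </object>
</annotation>
"""


@dataclass
class Label:
    """One supervised training target: a class name plus box coordinates."""
    name: str
    xmin: int
    ymin: int
    xmax: int
    ymax: int


def parse_annotation(xml_text: str) -> tuple[str, list[Label]]:
    """Return the frame's file name and every labeled object in it."""
    root = ET.fromstring(xml_text.strip())
    filename = root.findtext("filename")
    labels = []
    for obj in root.iter("object"):
        box = obj.find("bndbox")
        labels.append(
            Label(
                name=obj.findtext("name"),
                xmin=int(box.findtext("xmin")),
                ymin=int(box.findtext("ymin")),
                xmax=int(box.findtext("xmax")),
                ymax=int(box.findtext("ymax")),
            )
        )
    return filename, labels


if __name__ == "__main__":
    frame, labels = parse_annotation(SAMPLE_ANNOTATION)
    for label in labels:
        print(frame, label)
```

Each (class, box) pair becomes a training target for that single frame, which is why the quoted pipeline is described as supervised and per-frame.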

"The actual major milestone that is happening right now is really transition of the autonomy systems of cars, like AI, if you will, from thinking about things like 2.5D, things like isolated pictures… and transitioning to 4D, which is videos essentially. That architectural change, which has been underway for some time… really matters for full self-driving. It’s hard to convey how much better a fully 4D system would work. This is fundamental, the car will seem to have a giant improvement. Probably not later than this year, it will be able to do traffic lights, stops, turns, everything."

Tesla Video Data Processing Supercomputer ‘Dojo’ – Building a 4D System Aiming at L5 Self-Driving | Synced

The important distinction is that the NN now takes previous frames into account when analyzing the current frame (and likely also factors in the time between frames). That's why it's more like "video", where compression methods typically store certain frames as key frames and then encode the differences between frames. The previous approach, which Elon describes as "2.5D", is more like a series of images that are each treated in isolation.
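As a toy illustration of that distinction (not Tesla's architecture, and not a neural network at all), the sketch below contrasts a stateless per-frame analyzer with one that remembers the previous frame and uses the time gap between frames. The `Frame` type, the single object position, and the velocity estimate are hypothetical simplifications; they only show why access to earlier frames lets a system infer something, here speed, that no isolated frame contains.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Frame:
    timestamp: float  # seconds since the clip started
    object_x: float   # toy stand-in for a detected object's position (meters)


def analyze_isolated(frame: Frame) -> dict:
    """'2.5D'-style: each frame is interpreted on its own, with no memory."""
    return {"x": frame.object_x}


class TemporalAnalyzer:
    """Video-style: keeps the previous frame and uses the time gap to it."""

    def __init__(self) -> None:
        self.prev: Optional[Frame] = None

    def analyze(self, frame: Frame) -> dict:
        result = {"x": frame.object_x, "velocity": None}
        if self.prev is not None:
            dt = frame.timestamp - self.prev.timestamp
            if dt > 0:
                # Motion is only observable by comparing against an earlier frame.
                result["velocity"] = (frame.object_x - self.prev.object_x) / dt
        self.prev = frame
        return result


if __name__ == "__main__":
    # Three frames at 20 FPS, with the object moving 0.5 m per frame.
    frames = [Frame(i / 20, 10.0 + 0.5 * i) for i in range(3)]
    tracker = TemporalAnalyzer()
    for f in frames:
        print(analyze_isolated(f), tracker.analyze(f))
```

The isolated analyzer can only ever report where the object is; the temporal one can also say how fast it is moving, which is the kind of extra information a system gains from looking at video rather than isolated pictures.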