Try some of your blowup dolls. You have so many to try, so one may work.

It still works, however. Been busted a few times with the red warning.

Why does it have to yell at me every time I get in the backseat to get some shut eye?

FSD v12.x (end to end AI)

- Thread starter Buckminster

- Tags

- #fixphantombraking autopilot chill is not chill elon is an ass fix fsd extreme late deceleration fsd does not commit to go fsd does not maintain proper speed fsd hard and late braking fsd has decision wobble fsd jerky driving fsd late deceleration fsd sometimes may not detect train crossings fsd stops too early too quickly fsd stops too far behind line fsd tailgating in city streets

I do about half my driving very late at night (and FSD performs wonderfully with little to no traffic at that time). I have a late '21 MY and I didn't pick up any evidence of the IR LEDs when I tried the phone camera method.

Last night I drove home at night and I got nags like every minute, until I started keeping steady pressure on the wheel. Is my infrared broken?

However, I've found that as long as I'm looking out at the road and I keep just a light touch on the wheel, FSDS 12.3.6 nags me very little, and I hardly ever have to apply any notable steering torque, day or night. This is definitely an improvement over 11.x, where I had to keep a bit of torque on the wheel all the time.

On some of these night drives, I get several beep notifications with messages about possibly blocked cameras, due to the lack of external lighting. But very rarely a pay-attention nag.

I should also say mine is not highway driving, but mostly suburban median-divided roads. If your report involved behavior on the highway, that could be more a characteristic of the older FSD stack.

I suspect I'm missing something, but isn't Navigate on Autopilot being used in that photo, rather than FSD?

Has anyone had a mapped route where FSD turns onto a state road, goes a tenth of a mile and simply stops and stays in the middle of the lane where the route indicates a U-turn? Then displays a message that the maneuver isn't supported? The car won't do anything but block the lane. Been doing this for 2 1/2 years.

View attachment 1048615

Max Spaghetti

Member

There used to be a road I used regularly where, according to the nav, you couldn't do any turn that crossed the opposing traffic. It was a 4 lane road with just a painted line in the middle and even had extra turn lanes. The nav would do what it is doing in your description. Apple and Google mapping never had that problem.

So, among other things, I went to the TomTom website and discovered TomTom thought it was a divided road with an uncrossable median at many side streets. I figured that maybe incorrect TomTom map data was making its way to Tesla via Mapbox or something like that.

I submitted a map correction request online with TomTom (which, to their credit, they fixed within a day or two). The problem was solved in the next Nav Database update in the Tesla (about 6 months later).

I don't know if it's coincidence, and Tesla/Mapbox get their nav data from "a variety of sources", but in my case it seems it was TomTom's error.

sleepydoc

Well-Known Member

Perhaps, but as I said, it’s been pervasive across all sorts of situations, including no traffic at all. The interesting thing is it occurs on the highway which is supposedly on the v11 stack yet there has been a clear change in behavior.

Maybe it is encountering traffic patterns it has not been trained for?

Exactly, not sure why this “bug” is harder to understand than others. It’s happened to me several times on this trip. Other vehicles were not a factor, or certainly shouldn’t have been, and haven’t been in the past. Just like my intermittent dashcam issue, it was somehow introduced in the last update. I’ve never had my car refuse to move to the RH lane if I fully moved the stalk in the attempt, especially on the wide open western highways I’ve been traveling.

AlanSubie4Life

Efficiency Obsessed Member

This is all so boring now.

Predictions for 12.4:

1) Won't be better at stopping in any appreciable way. It might stop at the stop line, I guess. Gonna guess Stutterfest and Slamarama will persist.

2) Won't pass Chuck's ULT.

3) Will read speed limit signs better.

4) Speed control will be improved, somehow.

5) Won't curb wheels as much, I expect big and welcome improvements there, tired of disengaging on most corners due to lack of margin and incorrect line (even if 95% of the time it would not have hit).

6) There will be some improvements in lane selection and wandering. But it will still cause a lot of problems.

7) I expect 50%-100% more miles between disengagements/interventions. So maybe a couple miles.

I'll shoot for a success rate on predictions higher than success rate on CULT.

OK, I see the LEDs on my phone camera. So I don't know why it's not detecting me looking straight ahead. Maybe my eyes are too dark, or my lids too droopy, or it's because I'm sitting too far back, or something to do with the lighting on the freeway.

Take your cellphone out to your car, turn on your camera and look to the left and right of the cabin camera. There are 2 infrared lights in some cars you can only see with a phone. You don't need to take a picture or video.

Ben W

Chess Grandmaster (Supervised)

I've never gotten used to the on-screen side-view mirror feeds when the blinkers are on, though this is mostly because the car is moving and it's safer to keep eyes on the road. But bumper-cam views in non-moving blocked-visibility situations may be useful enough to overcome this. The canonical use case is e.g. pulling from a parking lot onto a fast busy street where the left-right views are blocked by large parked cars or vans. This is a tricky situation for humans too, and I'd love to know what a bumper-cam feed would feel like in this situation.

Another option might be to make the additional cameras viewable, though it might be difficult for the driver to manage the additional information in real time. The visualization can combine all of the cameras into one easy to read image, but I think the reliability of the visualization is still up for debate. One thing the visualization lacks is the ability to indicate parts of the road that it cannot actually see. For example, let's say there is a car approaching on the road you are trying to turn onto, but the cameras cannot see that part of the road (maybe a parked car is in the way). The visualization should leave that part of the road undrawn (as in black/blank) rather than render it as if there are no cars/objects present there.

The current visualization does "fuzz out" in areas it can't perceive; it generally won't show an empty road if it doesn't know it's empty. (At least that's been my experience; granted it still has some flicker/instability, especially at long range, and is far from perfect at detecting everything.)

Yes, but disengagement clips represent the car making a mistake and thus will never be used directly for training. They are only used indirectly, to inform Tesla what the prominent failure modes are, so they can gather more clips of good human driving in those situations (or synthesize such clips) to use for training.

Do you think disengaging and/or sending a feedback report has any effect on whether a clip is submitted?

I'm sure they are pre-screened by algorithm, but even 2 million short clips is few enough that I'm pretty sure they're individually human-reviewed. The consequences of a bad example (or a few) slipping through and becoming training examples could cause outsize problems with the neural network, and potentially legal problems too. Imagine if a clip of a car running over a pet makes it into the training set, and later someone sues Tesla when a car on FSD runs over their pet, and then the bad training video comes out in litigation!

Is it safe to assume that any clip making it into the NN was carefully reviewed by hand, or are some of them passed in by an algorithm? I think Elon said they rebuilt the NN for either 12.4 or 12.5 from scratch. If there are 2 million clips comprising the NN, I wonder how quickly they could validate all of them by hand. But maybe he meant the model was rebuilt, not the training set.

Edit: I understand 2 million clips have been used across various versions of the NN, but the number of clips in any one build of the NN might be less.

Ben W

Chess Grandmaster (Supervised)

A heavy abstraction cost is usually incurred when the underlying hardware instruction set has to be emulated, but I doubt this is the case for HW4 vs HW3. More likely HW4 can natively run all the HW3 code and models, perhaps with stalls added to simulate HW3 latency, leaving much of its compute capacity unused. (And as mentioned, down-rezzing for the cameras, though this is cheap.)

You mean just because the definitions you cited didn't address there being a performance cost? There's always a cost, just depends how extensive the emulation is. I don't know anything about the architectures involved, I'm just saying it's possible HW4 emulating HW3 could run slower than HW3. The opposite is also possible. The word emulation still implies an added layer of abstraction, which usually costs ya.

Ben W

Chess Grandmaster (Supervised)

I Want My Mommy / Average / Balls to the Wall.

I vote to change the settings from Chill / Average / Assertive to Timid / Average / Audacious.

Ben W

Chess Grandmaster (Supervised)

I don't think both stacks are ever running simultaneously, except maybe briefly during handoffs. But in general, one stack should not slow down the other.

Remember, 12.3.6 is running with software that has 2 stacks. One for city and then V11 for highway, which is filled with the old C++ code. When they merge the highway stack and have full e2e software, the space for the software will open up and compute speed should increase, therefore reducing lag and hopefully giving better performance.

The question is whether the eventual combined E2E network (v12.5) may have to be larger or have a different architecture than the current city-streets-only E2E network (v12.3 / 12.4). If e.g. more NN layers are required in order to handle the added informational complexity of processing both city streets and highways, the network may become slightly slower than v12.4, even though the v11 network is gone.

My guess is that the E2E network (at least at first) will become wider but not longer, so its overall latency should stay about the same. But soon after v12.5 (perhaps v13), I expect we will begin to see a divergence between HW3 and HW4, as the number of edge cases Tesla is trying to cram into the network starts to overflow the compute capacity of HW3. E.g. they may find that a HW3-sized network can achieve 1 safety disengagement per 1000 miles, but a HW4-sized network (trained on exactly the same data!) can achieve 1 disengagement per 10000 miles. At that point we'll be well on our way into the march of 9's.
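To put the "march of 9's" remark in numbers, here is a minimal sketch. It assumes disengagements are spread evenly over miles, and the two rates are the hypothetical ones from the post above, not measured data. The function name `nines` is made up for illustration.

```python
import math

def nines(miles_per_disengagement: float) -> float:
    """Count of 'nines' in the per-mile success rate.

    One disengagement per N miles means a per-mile failure rate of 1/N,
    so the success rate is 1 - 1/N, which has log10(N) nines.
    """
    return math.log10(miles_per_disengagement)

# Hypothetical figures from the post, not real fleet statistics.
for label, miles in [("HW3-sized network", 1_000), ("HW4-sized network", 10_000)]:
    success = 1.0 - 1.0 / miles
    print(f"{label}: {success:.4%} of miles without a disengagement, "
          f"{nines(miles):.0f} nines")
```

Each extra nine is a 10x increase in miles between disengagements, which is why the gap between the two hypothetical networks is exactly one nine.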

Ben W

Chess Grandmaster (Supervised)

Not per se, but it still does a pretty terrible job with U-turns. There's a spot in my area where the Nav always suggests the U-turn, and FSD always blows straight past it and reroutes. And another where FSD slows to a complete stop in traffic before making a left turn into a protected median. (It should emphatically not stop!) And another similar case when making a left turn, where the protected median has a stop sign, and FSD blows straight through the stop sign. (Although the last time I tried this the car stopped correctly, so hopefully this one has been fixed.) Look forward to testing out v12.4 in these spots when it's released!

Has anyone had a mapped route where FSD turns onto a state road, goes a tenth of a mile and simply stops and stays in the middle of the lane where the route indicates a U-turn? Then displays a message that the maneuver isn't supported? The car won't do anything but block the lane. Been doing this for 2 1/2 years.

Ben W

Chess Grandmaster (Supervised)

If the FSD network is this sensitive to resampling noise, that's a very bad sign, given how robust to all sorts of other environmental noise it needs to be. (Dust, dirt, rain, condensation, pigeons, etc.) My guess is that the downsampled HW4 images will generally be far higher quality than native HW3 images, and this should more than make up for any subtle resampling noise.

If all that’s being done is a resolution shift it shouldn’t matter much. The difference in processing power between HW3 and HW4 should more than make up for the transformation. My question would be whether the images are actually equivalent. Oftentimes resolution shifts introduce some noise and that may affect the system performance.

Ben W

Chess Grandmaster (Supervised)

Imperial dozens or metric dozens?

He mentions the car had "dozens of tiny cameras."

[Edit] Ah. The royal 'dozens'.

stopcrazypp

Well-Known Member

Yes, if Tesla uses a proper downsampling function it's possible even at a lower final resolution, the image is still much higher quality. They may simply be using the built-in sensor binning however (as they appear to have done for the side cameras when using dashcam) in which case you lose that advantage.

Regarding the video quality, I think it's more likely that HW4 video output downrezzed (aka down-sampled aka decimated) to HW3 will be of somewhat higher quality. I haven't seen any direct comparisons but:

- There are theoretical and practical benefits from the original image being taken at a higher resolution i.e. spatial sampling frequency. I wouldn't put too much on that without testing and knowing more about the level of anti-aliasing measures taken in both hardware setups.

If you own a camera that does 4K you can easily see this. Many such cameras, when put in 1080p mode, use binning, which results in an image that, while matching that of a native 1080p camera, isn't that high quality. If you instead record in 4K and downsample it in software later, it results in a much higher quality 1080p image. Or if the camera's ISP does proper downsampling, that also would result in higher quality.
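A toy illustration of why the way you reduce resolution matters. This is not a model of sensor binning in any particular camera, and certainly not of Tesla's pipeline; it only contrasts throwing pixels away with filtering before reducing, which is the anti-aliasing point raised in the quoted post. All function names are made up for the example.

```python
def make_stripes(width: int, height: int) -> list[list[int]]:
    """High-res test image: one-pixel-wide vertical stripes (0, 255, 0, 255, ...)."""
    return [[255 * (x % 2) for x in range(width)] for _ in range(height)]

def decimate(img: list[list[int]], factor: int = 2) -> list[list[int]]:
    """Keep every `factor`-th pixel and discard the rest (no filtering)."""
    return [row[::factor] for row in img[::factor]]

def box_downsample(img: list[list[int]], factor: int = 2) -> list[list[int]]:
    """Average each factor x factor block into a single output pixel."""
    out = []
    for y in range(0, len(img) - factor + 1, factor):
        row = []
        for x in range(0, len(img[0]) - factor + 1, factor):
            block = [img[y + dy][x + dx]
                     for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) // len(block))
        out.append(row)
    return out

hi_res = make_stripes(8, 4)
print(decimate(hi_res)[0])        # [0, 0, 0, 0]: stripes alias to solid black
print(box_downsample(hi_res)[0])  # [127, 127, 127, 127]: average brightness kept
```

The unfiltered version reports a black image that was never there, while the averaged version keeps the true mean brightness. Real downsamplers use better filters than a box average, but the direction of the effect is the same.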

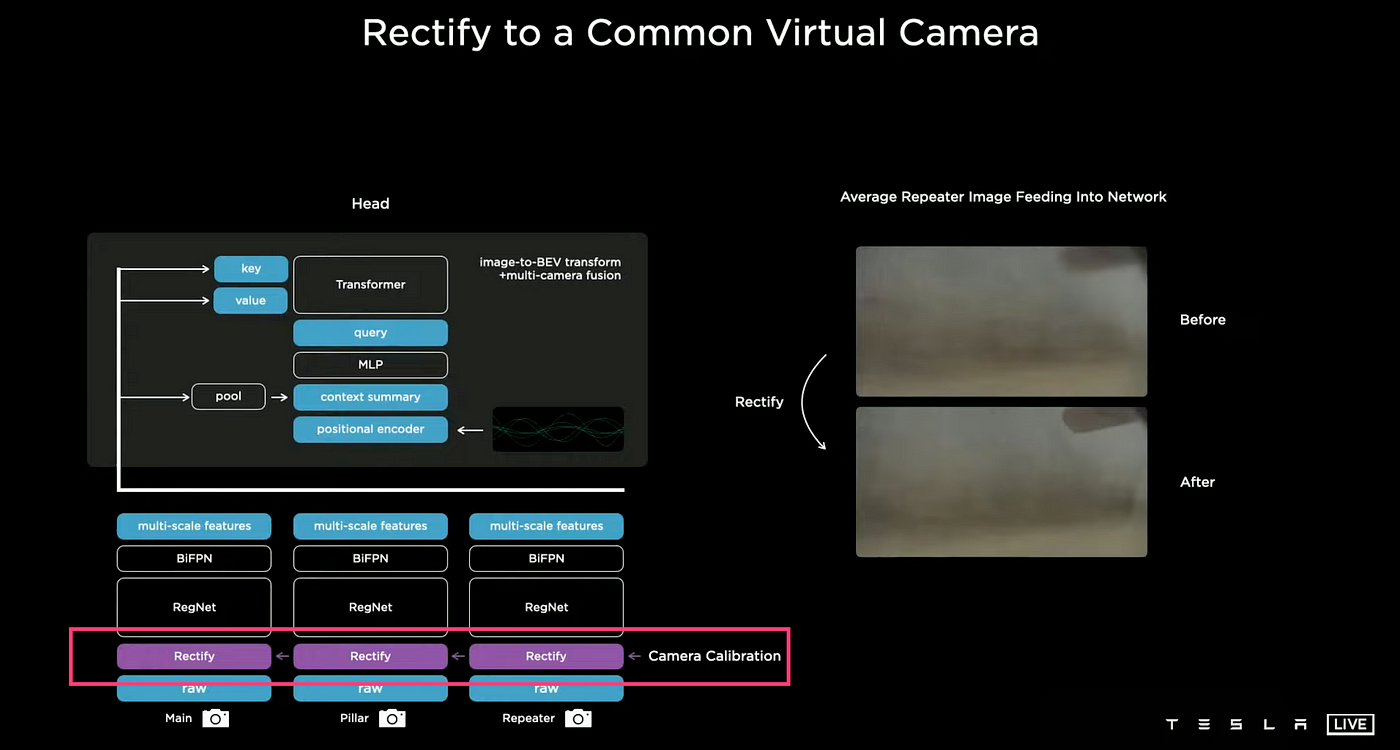

Tesla may no longer use this after switching to E2E (or even when they talked about switching to 10-bit RAW), but before, they had a camera rectification function that served to normalize the cameras (since even with the same model of camera, the lenses wouldn't be exactly the same, and the mounting positions wouldn't be either). They may be using a similar function for HW4 vs HW3.

- Aside from pixel resolution, I believe the new cameras can operate at a higher frame rate. I don't know if they are simply being run at the HW3 rate now, or if the video is being processed to normalize the rate.

- Perhaps the larger effect, in the Tesla HW3-HW4 case, is that the newer-generation cameras supposedly do a better job of suppressing sensor overload artifacts from bright sun in the frame. It's also likely that the low-light performance and noise floor are superior.

Tesla Full Self-Driving Technical Deep Dive

I detailed the HW3 vs HW4 computing differences here. In terms of the actual FSD hardware (and not the UI stuff handled by Ryzen):

I agree that the video down-sampling is not the whole story regarding Elon's comment. Aside from the question of video input quality, I think he means that clips from both HW3 and HW4 are used to train the same model, and then both in-car computers are running the same inference model. Not taking any real advantage of the more powerful computer in the HW4 vehicles.

While in general, there can be a loss of performance in having one computer type run another's native code using an emulation layer, I don't think that itself is a significant problem here. I would be shocked if the HW4 computer had not been engineered to run HW3 code directly at full performance; I think it was essentially a mandatory design requirement for the rollout of functional HW4 vehicles in 2023. Perhaps "compatibility mode" would have been a clearer term.

So I don't think the "emulation" represents a loss of quality in video or in compute - more likely some modest actual gain in the former. Elon's comment should mostly be taken to mean that HW4 hasn't achieved its full potential, because they haven't yet deployed a version customized for it.

The CPUs went from 12 cores (3x4) 2.2GHz to 20 cores (5x4) 2.37 GHz, using the same Cortex-A72 cores with a moderate speed bump.

The NPU went from 2 NPUs to 3 NPUs.

Discussion: HW.4 Suite - Availability, retrofit, suitability etc.

There is basically no compatibility mode needed nor any sort of emulation layer needed. It's not like they actually changed architecture. A dumb way for HW4 to simulate HW3 is simply to shut down the extra cores and throttle the CPUs back down to the original.

The biggest differences remain in the cameras.

David Wang

Member

I rode Hwy 17 from Scotts Valley to Los Gatos every day on a motorcycle for work in the 90s. Very fun on a bike. I can imagine how FSDS makes it a piece-o-cake. My commute was just exciting.

Back in the 90s my gf, now wife, used to live in Aptos and I lived in San Jose. I would take Hwy 17 to visit her every weekend. Never got used to the drive and it was always a stressful experience. Now it's a piece of cake with FSD.

But in fact you DON'T want masses of camera resolution. You need as much resolution as required to get the job done properly and then NO MORE. Any more just wastes vast amounts of NN/CPU power to generate the same result. Given where camera tech is today, Tesla probably chose the cheapest option, which is almost certainly far higher than needed. More important than resolution is dynamic range and processing lag in the camera. There is also the extra overhead of moving vast amounts of uncompressed video data around (compression adds far too much lag) at the same time as you are keeping the NNs fed.

Crudely speaking (and there are in reality far more variables) you have an increase in camera resolution of 400%, but only a 150% increase in NN capacity. So if you don't do something smart HW4 is going to be a lot SLOWER than HW3 (and, in fact, I'm pretty sure Tesla ARE doing a few smart things).
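The crude arithmetic can be written out as a quick sketch. The core counts, clocks and NPU counts are the ones quoted in this thread, not independently verified. Two assumptions are mine: "400%" is read as 4x the pixels per frame, and each NPU is treated as having the same throughput on both boards.

```python
# Figures quoted in the thread (unverified).
HW3 = {"cpu_cores": 12, "cpu_ghz": 2.2, "npus": 2}
HW4 = {"cpu_cores": 20, "cpu_ghz": 2.37, "npus": 3}

# Assumption: "400%" means 4x the pixels per frame at native resolution.
PIXEL_RATIO = 4.0

# Aggregate core-GHz as a very rough proxy for CPU capacity.
cpu_ratio = (HW4["cpu_cores"] * HW4["cpu_ghz"]) / (HW3["cpu_cores"] * HW3["cpu_ghz"])

# Assumption: per-NPU throughput is unchanged, so capacity scales with count.
npu_ratio = HW4["npus"] / HW3["npus"]

# NN capacity available per input pixel, relative to HW3.
npu_per_pixel = npu_ratio / PIXEL_RATIO

print(f"CPU capacity ratio:        {cpu_ratio:.2f}x")
print(f"NPU capacity ratio:        {npu_ratio:.2f}x")
print(f"NPU capacity per pixel:    {npu_per_pixel:.3f}x of HW3")
```

Under these assumptions HW4 has well under half the NN capacity per native-resolution pixel that HW3 has, which is the poster's point about needing to do "something smart" such as downsampling before inference.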

Right, that's great theoretically, but if you recall, I kinda brought this all up because Elon chose to use the term emulation. It could be absolutely nothing, just a simple term he figured everyone could understand, and HW4 may have been designed from the ground up to be in "HW3 emulation mode" efficiently. I agree that this would make the most sense.

[EDIT: Just read tronguy's explanation, which is excellent.]

willow_hiller

Well-Known Member
